S3 View and Copy

I’ve used Cyberduck for years as an S3 viewer for files in Digital Ocean Spaces. The main problem has been selecting a large number of files and downloading them.

For choices in S3 storage, see this article.

S3 Browser

S3 Browser was the first alternative I looked at, but important features such as Download To are not available in the free version.

So I quickly moved on to:

rclone

This is a command-line tool (with an optional web GUI), which I’m using from WSL2 on Windows.

# installs the latest version (1.71.0 on 15th Sept 2025)

sudo -v ; curl https://rclone.org/install.sh | sudo bash

# the GUI runs in the web browser (but I prefer the command line)

rclone rcd --rc-web-gui

# ~/.config/rclone/rclone.conf

# Digital Ocean Frankfurt (my default)

[do-first-project]

type = s3

provider = DigitalOcean

env_auth = false

access_key_id = XXXXXX

secret_access_key = XXXXX

region = fra1

endpoint = fra1.digitaloceanspaces.com

# Digital Ocean New York

[do-first-project-nyc3]

type = s3

provider = DigitalOcean

env_auth = false

access_key_id = XXX

secret_access_key = XXXXX

region = nyc3

endpoint = nyc3.digitaloceanspaces.com

# Hetzner Falkenstein

# the destination I'll be writing to; note the bucket is not public, but it can be made public in the Hetzner UI

[hetzner-fsn1]

type = s3

provider = Other

access_key_id = XXXXX

secret_access_key = XXXX

endpoint = fsn1.your-objectstorage.com

region = fsn1

# list the directories in bucket davetesting

rclone lsd do-first-project:davetesting

# copy (doesn't delete any extra files at destination)

rclone copy do-first-project:davetesting /mnt/f/Backups/DigitalOcean/davetesting --progress

# no need to create the destination folder first (rclone will create it automatically)

# 8.6GB test - a single 4GB file in there

# doing 4 files at a time

# maxing out my USB HDD

# it won't copy a file again if it is already there (treating modification times within 2 secs as equal worked for me)

rclone copy do-first-project:autoarchiverfeatures /mnt/f/Backups/DigitalOcean/autoarchiverfeatures --progress --modify-window 2s
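To illustrate what `--modify-window 2s` changes: by default rclone decides whether a file needs copying from its size and modification time, and the window sets how far apart two timestamps may be while still counting as equal. The following is only a rough sketch of that comparison in plain bash, not rclone's actual code. The function name `same_file` and its arguments are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of a size + modification-time comparison with a tolerance window.
# same_file is a hypothetical helper for illustration, not part of rclone.
# Arguments: src_size src_mtime dest_size dest_mtime window
# (sizes in bytes, times and window in whole seconds)
same_file() {
  local src_size="$1" src_mtime="$2" dest_size="$3" dest_mtime="$4" window="$5"

  # Different sizes always mean the file needs copying.
  [ "$src_size" -eq "$dest_size" ] || return 1

  # Absolute difference between the two timestamps.
  local diff=$(( src_mtime - dest_mtime ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( -diff ))
  fi

  # Treated as unchanged if the timestamps are at most $window seconds apart.
  [ "$diff" -le "$window" ]
}

# Same size, timestamps 1 second apart, 2 second window.
if same_file 1024 1757930000 1024 1757930001 2; then
  echo "skip"
else
  echo "copy"
fi
```

With a 2 second window the example prints `skip`; with a window of 0 the same pair would be copied again.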

# 40k files

# use --fast-list for 10k+ files: it gets a flat list, so fewer API requests but more RAM usage locally

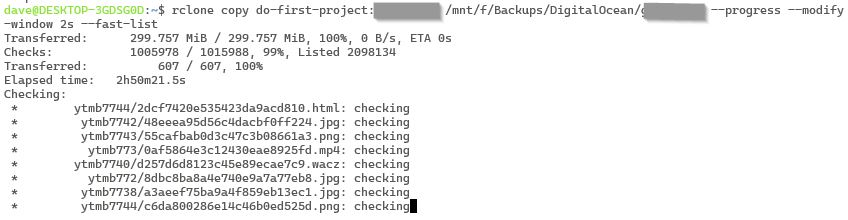

rclone copy do-first-project:aademomain /mnt/f/Backups/DigitalOcean/aademomain --progress --modify-window 2s --fast-list

rclone copy do-first-project:lighthouse-reports /mnt/f/Backups/DigitalOcean/lighthouse-reports --progress --modify-window 2s --fast-list

rclone copy do-first-project-nyc3:carlos-demo /mnt/f/Backups/DigitalOcean/carlos-demo --progress --modify-window 2s --fast-list

rclone copy do-first-project:xxxx-ytbm /mnt/f/Backups/DigitalOcean/xxxx-ytbm --progress --modify-window 2s --fast-list

# I got NoSuchKey errors with fast-list, but fine without (on subsequent checks)

rclone copy do-first-project-nyc3:xxxx /mnt/f/Backups/DigitalOcean/xxxx --progress --modify-window 2s --fast-list
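One way to handle the `--fast-list` errors without watching each run is to retry automatically without the flag. This is a minimal sketch, assuming rclone exits with a non-zero status when a copy hits errors. `copy_with_fallback` and the `RCLONE` variable are hypothetical names for this example.

```bash
#!/usr/bin/env bash
# Sketch: try a copy with --fast-list first, then retry without it on failure.
# copy_with_fallback and RCLONE are hypothetical names used for illustration.
RCLONE="${RCLONE:-rclone}"

copy_with_fallback() {
  local src="$1" dest="$2"

  # First attempt: flat listing, fewer API requests.
  if "$RCLONE" copy "$src" "$dest" --progress --modify-window 2s --fast-list; then
    return 0
  fi

  echo "copy with --fast-list failed, retrying without it" >&2

  # Second attempt: normal directory-by-directory listing.
  "$RCLONE" copy "$src" "$dest" --progress --modify-window 2s
}

# Example call (commented out so the snippet does nothing until you opt in):
# copy_with_fallback do-first-project-nyc3:xxxx /mnt/f/Backups/DigitalOcean/xxxx
```

Because files that already arrived are skipped on the second attempt, the retry only has to fetch what the first run missed.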

## Hetzner

`fsn1.your-objectstorage.com`

rclone lsd hetzner-fsn1:davetesting

# copy from local to Hetzner

rclone copy /mnt/f/Backups/DigitalOcean/davetesting hetzner-fsn1:davetesting --progress
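After an upload like this, `rclone check` can compare the local folder with the bucket without changing either side. A minimal sketch, assuming the same paths as above; `upload_and_verify` and the `RCLONE` variable are hypothetical names for this example.

```bash
#!/usr/bin/env bash
# Sketch: copy a local folder to a remote, then verify that both sides match.
# upload_and_verify and RCLONE are hypothetical names used for illustration.
RCLONE="${RCLONE:-rclone}"

upload_and_verify() {
  local src="$1" dest="$2"

  # Upload; stop here if the copy itself reports errors.
  "$RCLONE" copy "$src" "$dest" --progress || return 1

  # Compare source and destination; the exit status is non-zero on differences.
  "$RCLONE" check "$src" "$dest"
}

# Example call (commented out so the snippet does nothing until you opt in):
# upload_and_verify /mnt/f/Backups/DigitalOcean/davetesting hetzner-fsn1:davetesting
```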

## Google Drive

https://rclone.org/drive/#making-your-own-client-id

rclone config

# I named the remote gdrive-personal

# storage type 22 is Google Drive (the number can differ between rclone versions)

rclone ls gdrive-personal:

rclone copy gdrive-personal: /mnt/f/Backups/gdrive-personal --progress --modify-window 2s

Bash script
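A minimal sketch of such a script, assuming the remotes and bucket names used in the commands above. It loops over a list of `remote:bucket` pairs and runs the same `rclone copy` for each one. `BACKUP_ROOT`, `DRY_RUN`, `BUCKETS` and `backup_bucket` are hypothetical names, and the dry-run mode here simply prints the commands rather than using any rclone feature.

```bash
#!/usr/bin/env bash
# Sketch of a backup script: copy several buckets to a local folder with rclone.
# BACKUP_ROOT, DRY_RUN, BUCKETS and backup_bucket are hypothetical names.
set -euo pipefail

BACKUP_ROOT="${BACKUP_ROOT:-/mnt/f/Backups/DigitalOcean}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = run them

# remote:bucket pairs taken from the commands earlier in this post
BUCKETS=(
  "do-first-project:davetesting"
  "do-first-project:autoarchiverfeatures"
  "do-first-project:aademomain"
  "do-first-project:lighthouse-reports"
  "do-first-project-nyc3:carlos-demo"
)

backup_bucket() {
  local src="$1"
  local bucket="${src#*:}"   # the part after the colon is the bucket name
  local cmd=(rclone copy "$src" "$BACKUP_ROOT/$bucket" --progress --modify-window 2s)

  if [ "$DRY_RUN" = "1" ]; then
    # Print the command instead of running it.
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

for src in "${BUCKETS[@]}"; do
  backup_bucket "$src"
done
```

By default this only prints the five commands. Running it with `DRY_RUN=0` performs the copies, and `set -e` stops the script at the first bucket that fails.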

Conclusion

I’m loving the stability of rclone and its well-known change detection, which means I’m not downloading the same files again every time. Huge collections of files still take time: I’ve got 2 million files, which take over 3 hours to check.