Web Page Archiving

How to Archive Single URLs: A Guide

Need to archive webpages but are unsure how to go about it?

This guide will walk you through my favourite tools and techniques to ensure your links are archived in the best way possible.

In This Article

- Why Archive? – The importance of preserving webpages.

- General Archival Tools – The most reliable solutions.

- Platform-Specific Archival Tools – What works best for different sites.

- Manual Archival Methods – Including browser extensions.

- Appendix – Additional resources and thoughts.

Key Summary

- Bellingcat/auto-archiver – The most effective solution I know of for comprehensive archiving. Shameless plug: I have a hosted version.

- Wayback Machine – I always submit URLs here (though platforms like X, Instagram, and Facebook may not archive well).

- Archive.ph – Very good, and better than the auto-archiver or the Wayback Machine for bypassing paywalls.

- Webrecorder – A good tool for crawling sites.

This article was written to explore alternatives to the auto-archiver, which I use a lot. I haven’t found a better solution. If you have suggestions or thoughts, please get in touch: davemateer@gmail.com

1. Why Archive?

Before we dive into the methods of archiving single URLs, it is worth understanding why you might be doing it. Online content can disappear, often very quickly from large platforms. This is a big problem and one of the main reasons we archive.

Who Might Benefit?

I’ve found that archiving is useful for:

- Human Rights Investigative Organisations – This is my own field, where our work has been recognised with an international award which we are very proud of!

- Investigative Journalism

- Insurance Investigations

- Corporate Investigations

- Law Enforcement

- Legal Teams and Firms

- Other Investigative Organisations

- Online Discussion Forums, e.g. Hacker News

- Digital Preservation, e.g. the Digital Preservation Coalition or any of their supporters

What Should You Archive?

Depending on your requirements, here are various elements you may wish to preserve:

- Screenshots – To capture the exact visual context of a webpage.

- HTML Versions – Keeping the structure and content accessible even if the original site is altered.

- Images and Videos – Particularly important for evidence, especially in human rights cases.

- Text Content – Valuable context.

- WARC or WACZ Files – Enabling you to replay the entire page and observe changes over time.

- Hashes and Timestamps – Providing proof of an asset’s existence at a specific moment.

The Purpose Behind Archiving

Archiving webpages is crucial for two main reasons:

- Analysis: It allows us to conduct detailed reviews and investigations long after the original content was removed or changed.

- Preservation: It helps maintain a reliable record for articles, reports or legal cases.

For the past four years, I have been involved in human rights archiving, focusing on safeguarding images and videos. While text is valuable, it is the visual evidence that is the most important.

By hashing content and publishing the hashes on immutable platforms (a practice we are moving away from in favour of timestamping), we can give these records a strong degree of reliability.

1.1 What to Archive (Input)

Let’s take the example of an important post on X (a Tweet) that you want to archive using the auto-archiver.

Setting Up Your Archive Input

Put the URL into a spreadsheet.

Once the archiving kicks off (usually within a minute), the archiver processes these URLs and writes the results directly back into the spreadsheet:

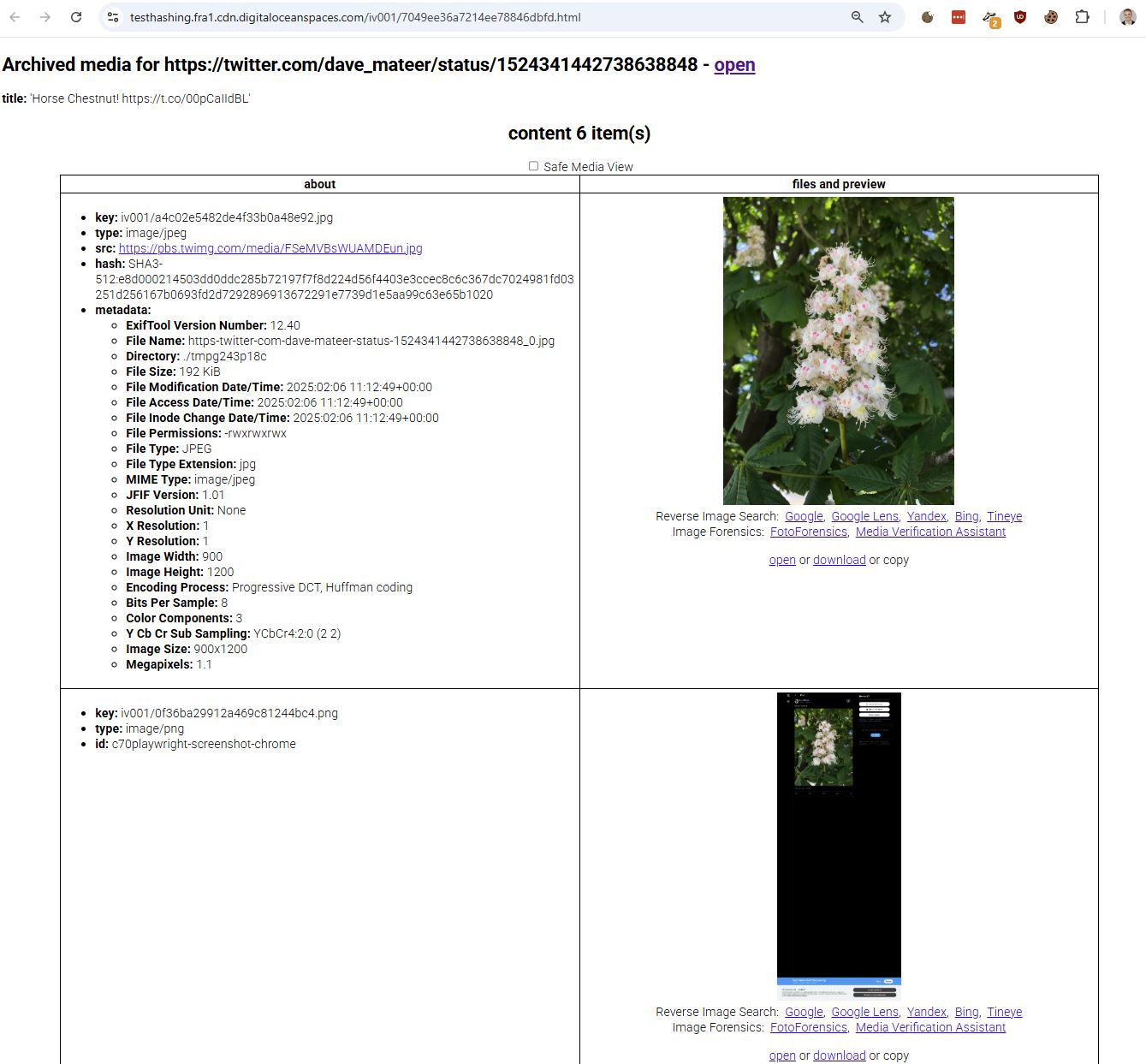

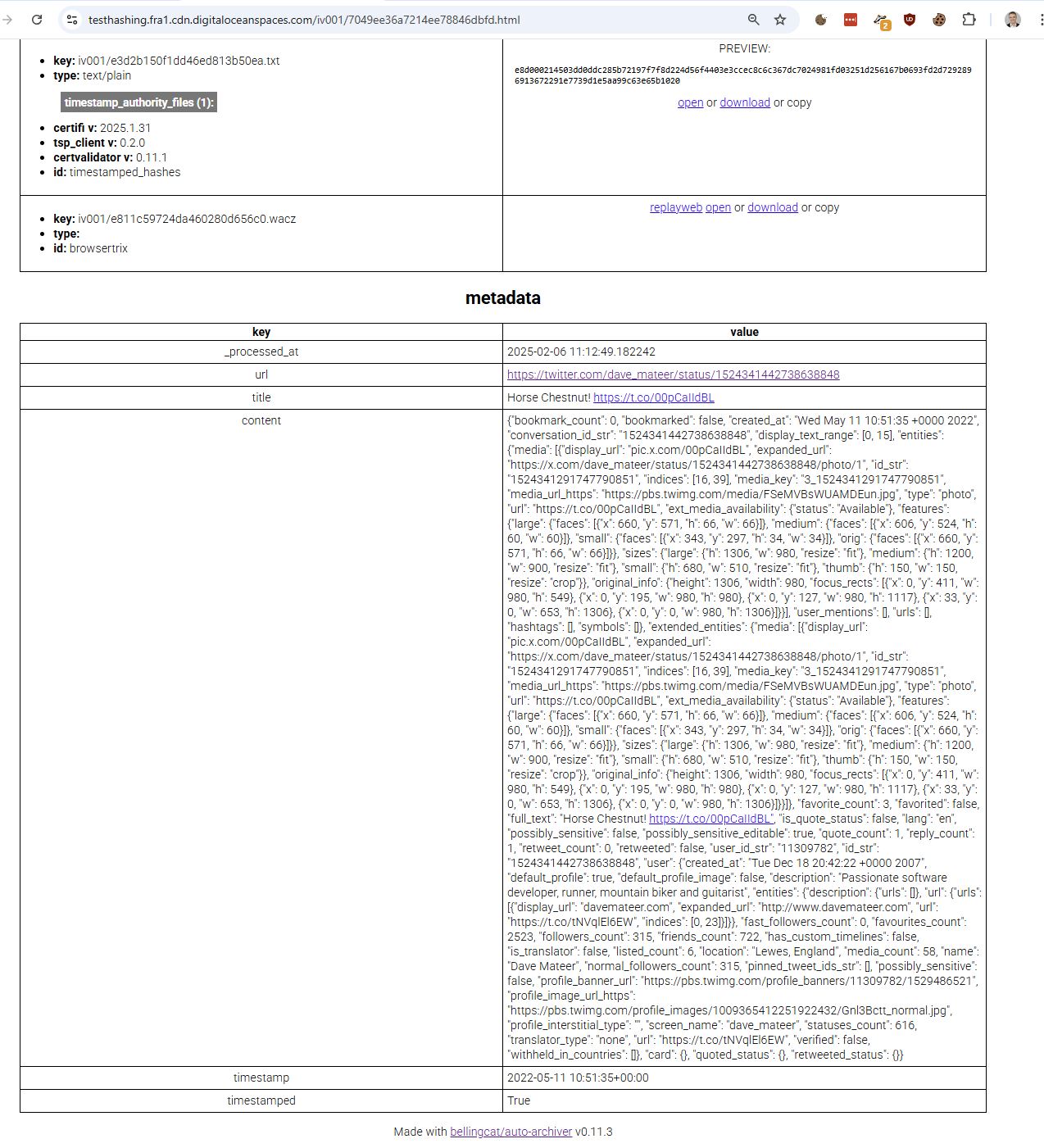

Exploring the Archive Details

Every archived page includes a details page, which you can access via a direct link. On this page, you’ll find:

- Full Resolution Images: Complete with EXIF metadata and hashes.

- Screenshots: A visual capture of the page at the time of archiving.

- Reverse Image Search Links: Helping you track the image’s origins.

- A Timestamped Hash: Proving what the content was at this moment in time.

Take a look at this snapshot:

The archive also stores a timestamped hash of the file, a WACZ archive of the entire page, and the full text content of the Tweet.

Why Google Spreadsheets?

I’ve found that Google Spreadsheets is flexible, free, and fantastic for collaboration. I have worked on sheets with 70,000+ rows (per tab) while collaborating with over 10 people at once. It can use significant resources on my machine and slow down, but it is extremely impressive.

Curated Test Links for Archiving

During my research, I compiled a list of test links that covers various scenarios—ranging from pages with numerous images to those with extensive content. You can check out the Google Spreadsheet of Test Links to see these examples in action. If you have any interesting links you’d like to have archived, feel free to drop me a line at davemateer@gmail.com.

Final Thoughts on Inputs

It quickly became apparent that archiving large platforms (where most of my work is concentrated) often demands specialised, targeted archivers. That is why the auto-archiver works well: it has plugins for each platform.

1.2 How to Store Archives (Output)

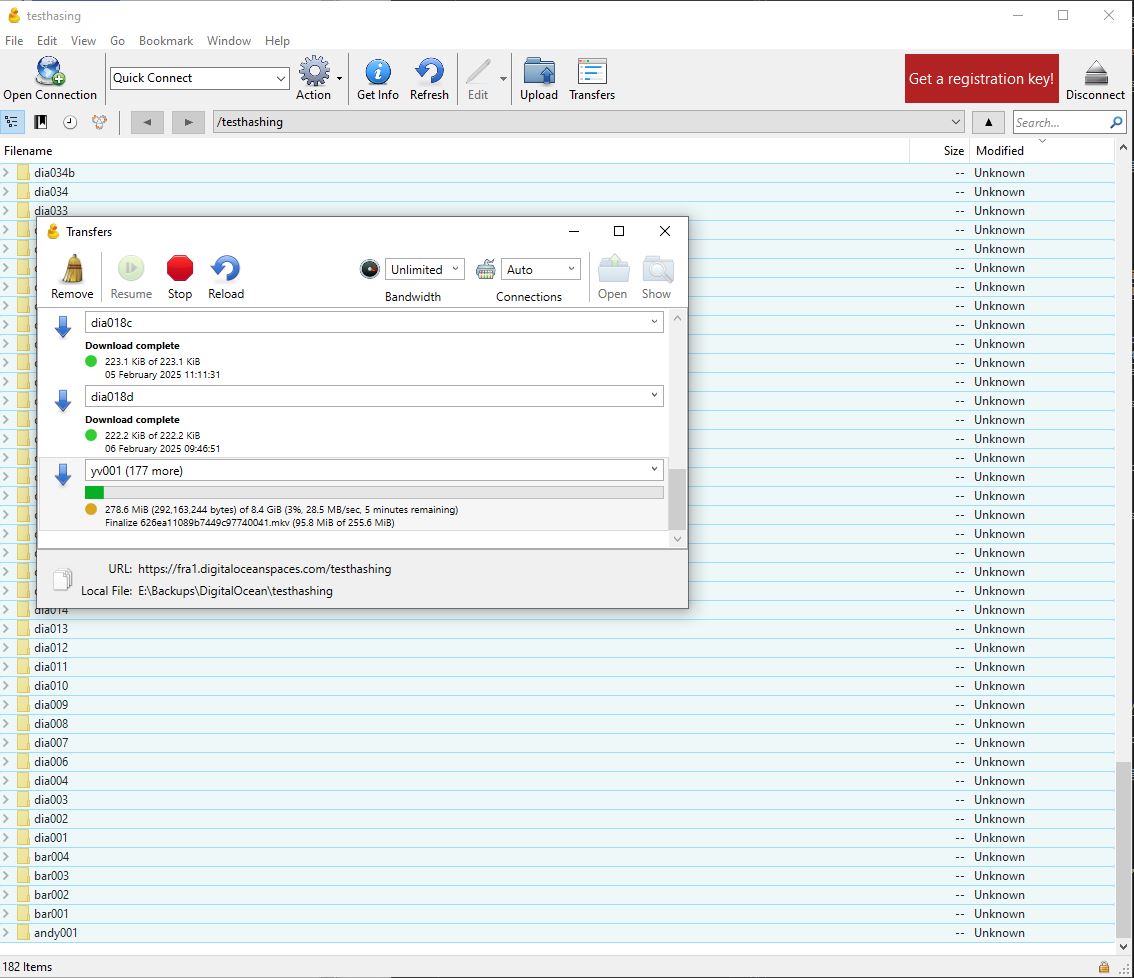

When it comes to storing your archives, the best option depends on your organisation’s needs and the infrastructure you already have in place. Here are some common storage solutions I work with:

- S3 Storage: For instance, DigitalOcean Spaces.

- Google Workspace/Drive: Google Drive.

- Local/Network Storage: Use your own servers or network storage.

DigitalOcean Spaces

DigitalOcean Spaces is particularly effective in public mode. It can host any file and make it viewable online. For example, check out this Instagram auto-archiver output. Additionally, you can set up a CORS policy so that WACZ files hosted there can be loaded into ReplayWeb.page to view the captured page.
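As a rough sketch of that CORS setup: Spaces exposes an S3-compatible API, so boto3's `put_bucket_cors` should work against it. The Space name, the `ams3` region and allowing only `https://replayweb.page` as an origin are assumptions for illustration, not settings taken from the auto-archiver; credentials are assumed to come from the standard AWS environment variables.

```python
"""Sketch: let ReplayWeb.page fetch WACZ files from a DigitalOcean Space.

Assumptions: the region, Space name and allowed origin are placeholders, and
credentials come from AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY.
"""


def build_cors_config(allowed_origin: str = "https://replayweb.page") -> dict:
    """Return a CORS configuration in the shape boto3's put_bucket_cors expects."""
    return {
        "CORSRules": [
            {
                "AllowedOrigins": [allowed_origin],
                # Read-only access is all a replay viewer needs.
                "AllowedMethods": ["GET", "HEAD"],
                "AllowedHeaders": ["*"],
                # Let the browser see the headers involved in partial (range) reads.
                "ExposeHeaders": ["Content-Length", "Content-Range", "Accept-Ranges", "ETag"],
                "MaxAgeSeconds": 3600,
            }
        ]
    }


def apply_cors(space_name: str, region: str = "ams3") -> None:
    """Apply the CORS rules to a Space through its S3-compatible endpoint."""
    import boto3  # imported here so build_cors_config works without boto3 installed

    s3 = boto3.client(
        "s3",
        region_name=region,
        endpoint_url=f"https://{region}.digitaloceanspaces.com",
    )
    s3.put_bucket_cors(Bucket=space_name, CORSConfiguration=build_cors_config())
```

Calling `apply_cors("my-archive-space")` (a hypothetical Space name) applies the rules once. Exposing `Content-Range` and `Accept-Ranges` is a precaution, on the assumption that the viewer reads large WACZ files with HTTP range requests.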

Google Drive

Google Drive offers excellent control over files within your domain. However, while it is great for file management, you cannot serve a webpage directly from it or use it for direct image linking.

Additional Tools

For those managing S3 storage, Cyberduck S3 Viewer is a great tool for viewing and copying files to your S3 buckets.

2. General Archival Tools

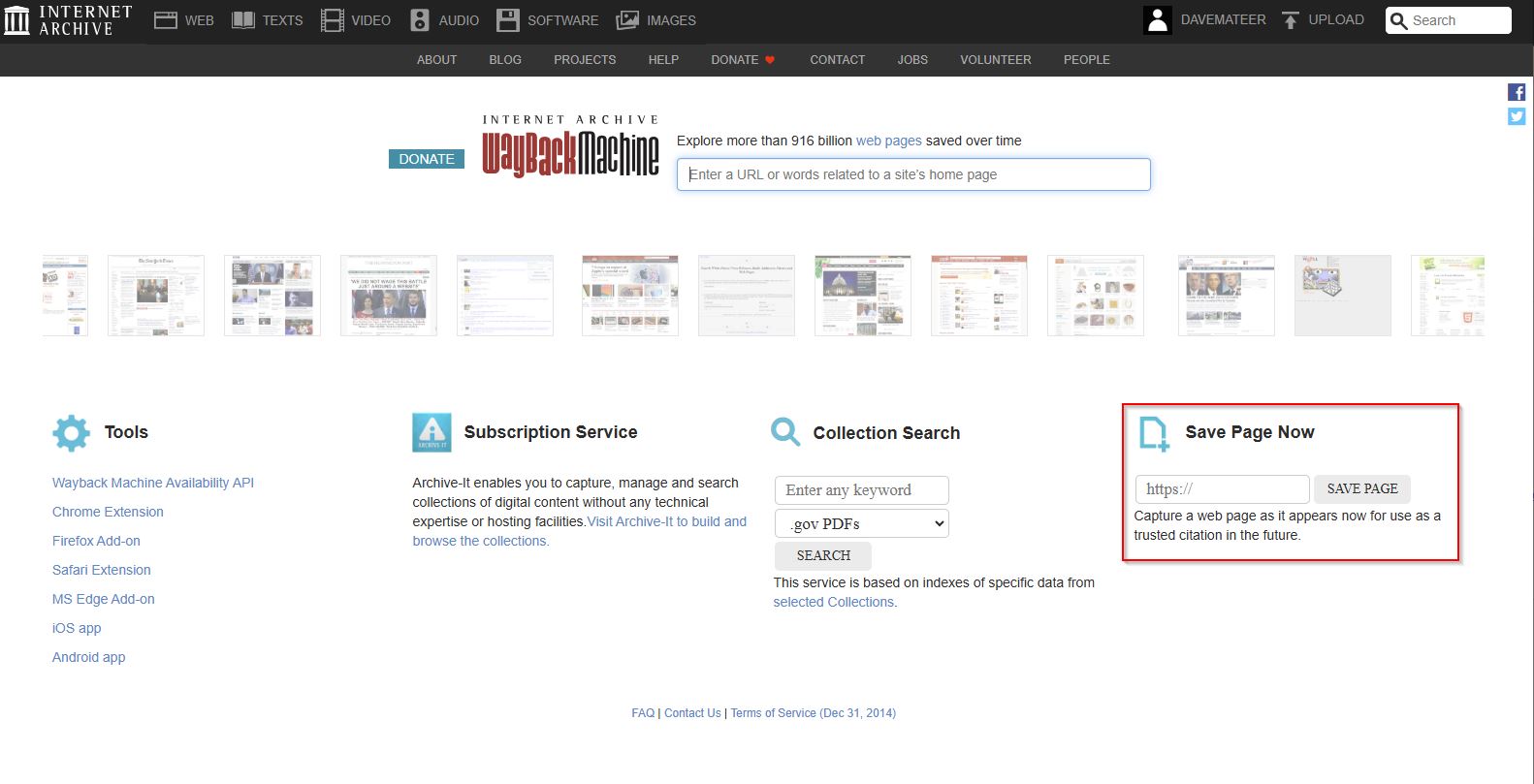

2.1 Wayback Machine

The Wayback Machine from the Internet Archive, or simply web.archive.org, is the most popular free archival tool available today.

I always submit my archived URLs here, often through their API. This invaluable service, provided by the non-profit Internet Archive, has helped preserve hundreds of billions of pages over the last 23 years.

However, it’s important to note that the Wayback Machine doesn’t work well with larger platforms like Instagram (currently blocked) and Facebook. Your mileage may vary.

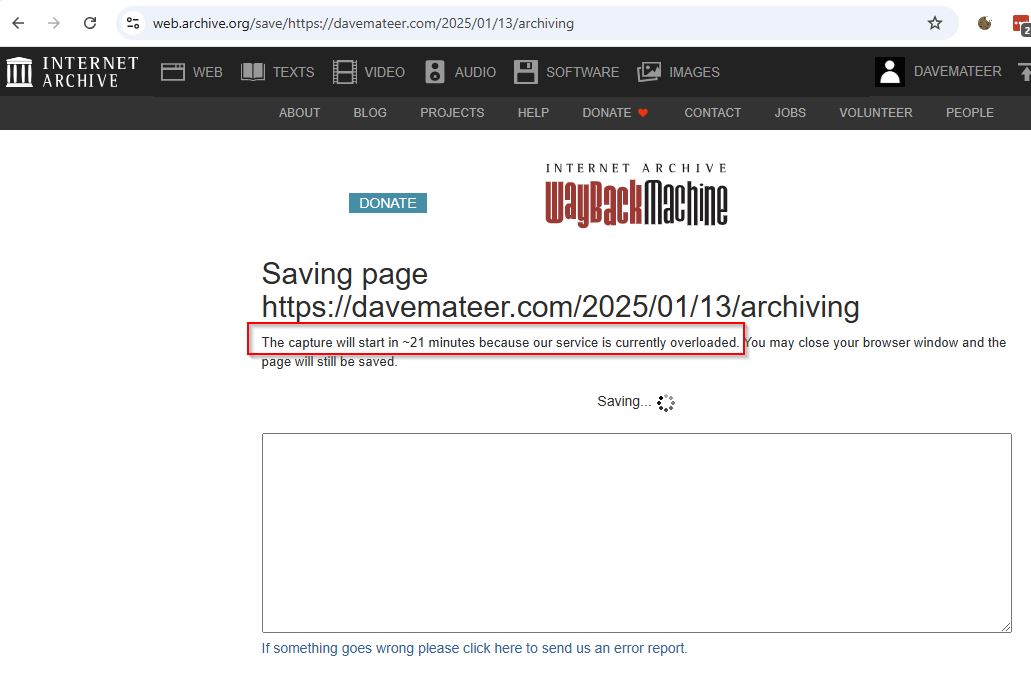

Keep in mind that the service can sometimes get overloaded. The API, in particular, can take up to 5 minutes to confirm a successful save, and the screenshot above shows a 21-minute wait in the web UI.
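For API submissions, here is a minimal submit-then-poll sketch. It is written against my understanding of the Save Page Now 2 API: a POST to `/save` with `LOW access:secret` authorisation, then polling `/save/status/<job_id>`. Treat the endpoint details and response fields (`job_id`, `status`) as assumptions to check against the Internet Archive's current documentation. The 5-minute default timeout mirrors the confirmation delay mentioned above.

```python
import json
import time
import urllib.parse
import urllib.request

SAVE_ENDPOINT = "https://web.archive.org/save"
STATUS_ENDPOINT = "https://web.archive.org/save/status/"


def _auth_headers(access_key: str, secret_key: str) -> dict:
    # Assumed SPN2 auth scheme using archive.org S3-style keys.
    return {"Accept": "application/json", "Authorization": f"LOW {access_key}:{secret_key}"}


def build_save_request(url: str, access_key: str, secret_key: str) -> urllib.request.Request:
    """Build the POST that asks the Wayback Machine to capture `url`."""
    body = urllib.parse.urlencode({"url": url}).encode()
    return urllib.request.Request(
        SAVE_ENDPOINT, data=body, method="POST", headers=_auth_headers(access_key, secret_key)
    )


def submit_and_wait(url, access_key, secret_key, timeout_s=300, poll_s=10) -> dict:
    """Submit a URL, then poll the job status until it leaves 'pending' or we time out."""
    with urllib.request.urlopen(build_save_request(url, access_key, secret_key)) as resp:
        job_id = json.load(resp)["job_id"]

    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        status_req = urllib.request.Request(
            STATUS_ENDPOINT + job_id, headers=_auth_headers(access_key, secret_key)
        )
        with urllib.request.urlopen(status_req) as resp:
            status = json.load(resp)
        if status.get("status") != "pending":
            return status  # e.g. 'success' with a capture timestamp, or 'error'
        time.sleep(poll_s)
    raise TimeoutError(f"Wayback save for {url} still pending after {timeout_s}s")
```

Polling rather than blocking on a single request keeps the caller in control when the service is overloaded, and raising `TimeoutError` lets a batch job retry the URL later instead of hanging.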

2.2 Auto-archiver

The auto-archiver, built by the amazing team at Bellingcat, knits together the best open source tools and is continually evolving. I’ve been using and contributing to this project for the past three years, and I even offer it as a hosted service.

This suite of tools is designed to help you archive content from several specific platforms, including:

- X/Twitter

- Telegram

- TikTok

- YouTube

- VK

Downsides

While the auto-archiver is the best at what it does, there are some challenges to keep in mind:

- Complex Setup: It can be hard to configure properly (this is being worked on as of Feb 2025).

- No UI: There isn’t a user-friendly interface available.

- Limited User Base: It doesn’t have a massive following yet.

- Constant Tweaking: It requires ongoing adjustments to keep pace with platform changes.

Upsides

Despite these challenges, the auto-archiver offers several significant advantages:

- Unmatched Raw Results: There is nothing better for capturing pure archival data.

- Commercial Support: You can get direct support and even speak with the source contributors (including me!).

- Proven Stability: It has been running reliably for over four years across various platforms (AWS, Azure, and bare metal).

- Python: It is written in Python, so others can easily understand it and work on it.

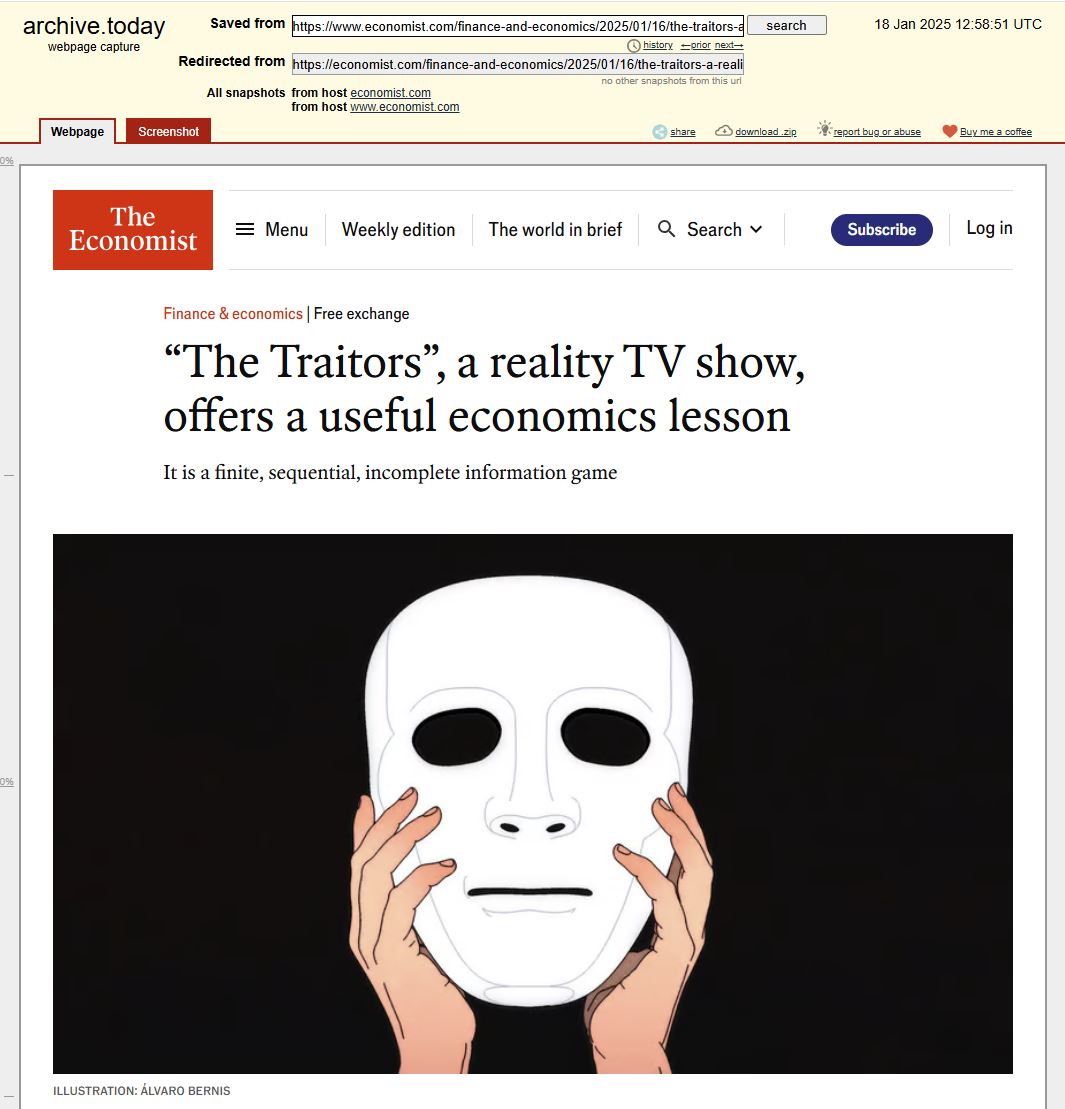

2.3 Archive.today

archive.today is known by several names, including:

All these URLs redirect to the same service.

This service is particularly effective at getting around paywalls—for example, take a look at this archived page. I’ve found nothing better for accessing paywalled content, which is why Hacker News often links to articles saved via Archive.today. If you ever run into issues—such as the site not loading or seeing a “Welcome to nginx” page—try deleting the site cookie in Chrome.

For more information, you can check out their FAQ. It’s worth noting that the identity of the site’s owner is kept under wraps, likely due to the controversial nature of bypassing paywalls and potential breaches of EULAs.

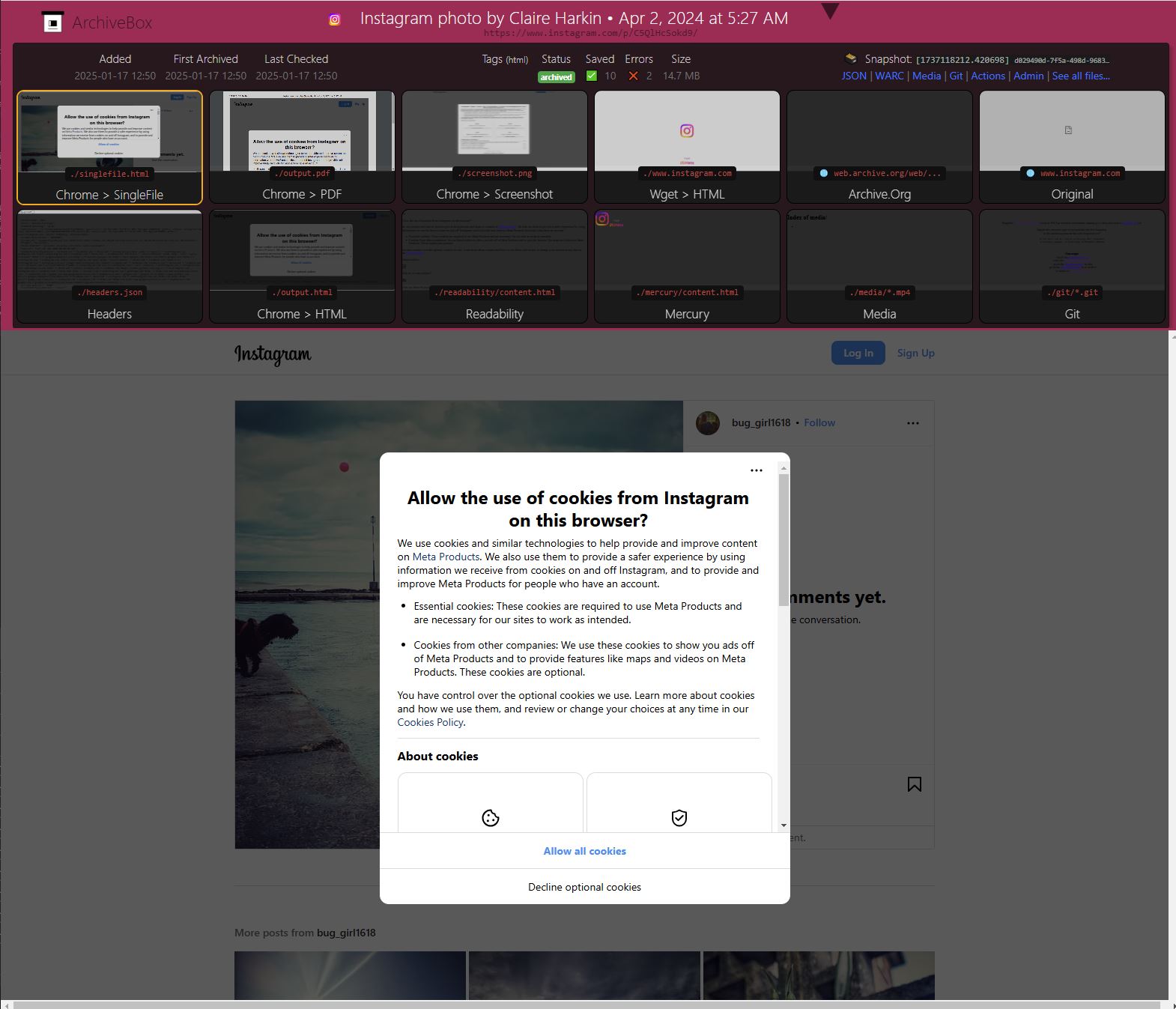

2.4 Open Source

These tools are interesting, but not as useful for my specific needs—I prefer the auto-archiver described above. Nevertheless, here are some noteworthy open source archiving projects:

Screenshot from ArchiveBox: notice the common problem of popups on large platforms.

- ArchiveBox – A fully featured self-hosted solution with a dedicated website. With 23k stars, it is similar to the auto-archiver (with a nice front end) but less specialised, and it is excellent for self-hosting.

- Webrecorder – A Chrome extension that saves pages as WARC/WACZ files. This project is by Ilya Kreymer, the same person behind the great Browsertrix Crawler (702 stars), which we use with the auto-archiver to save WACZ files. See the end of this article for more on this.

- Heritrix 3 – The Internet Archive’s web crawler project, with 2.9k stars and 45 contributors. Its output is largely similar to what the Wayback Machine produces.

- Monolith – A Rust-based tool with 12.4k stars and 28 contributors that embeds CSS, images, and JavaScript assets into a single HTML file, ideal for general archiving cases.

- Scoop – A new project focused on provenance. There is potential to use it instead of browsertrix-crawler in the auto-archiver (see https://github.com/bellingcat/auto-archiver/issues/248).

- Perma.cc – Widely used by academics, legal professionals, and libraries. It costs $10 per month for 10 links or $100 for 500 links. This service is built by the Harvard Library Innovation Lab in collaboration with Ilya.

- Conifer – Note: I’ve already submitted a bug report (as of January 29, 2025).

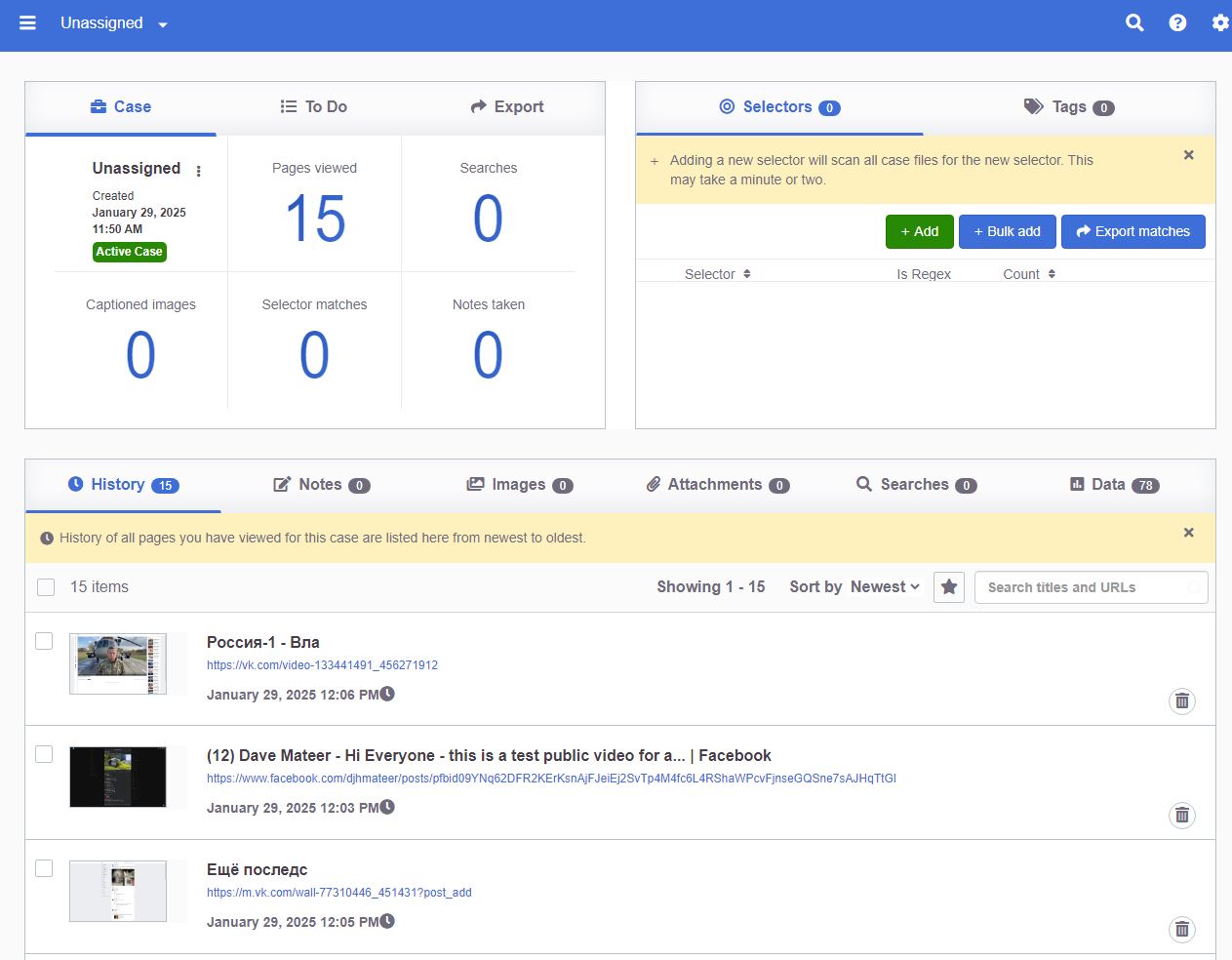

2.5 Commercial Archiving Tools

Surprisingly, there aren’t many commercial tools that can archive single pages as effectively as the open source options. However, some case/investigation management systems include basic archiving tools:

- Atlos – This platform includes a basic screenshot tool and even integrates with the auto-archiver.

- Hunch.ly – An OSINT tool designed for online investigations, available as both an app and an extension. It automatically captures content during your investigative process. $110 per year.

So far, I haven’t encountered any enterprise archiving tools that meet the needs of single-page investigative archiving as effectively as these solutions.

2.5.1 PageFreezer

PageFreezer is a Vancouver-based company with around 50-100 employees. I chatted to Doug, who was incredibly friendly and helpful in showing me how their products work.

PageFreezer specializes in capturing changes to web pages over time and provides a user-friendly interface to view these changes, similar to the Wayback Machine.

They offer two main products:

- PageFreezer: Crawls websites to track and visualise changes to web pages over time.

- WebPreserver: An automated tool for preserving social media and web evidence. It is a Chromium browser plugin that requires a subscription and allows exports in OCR PDF, MHTML, or WARC formats. It supports bulk capture on Facebook, Instagram, and TikTok, all filtered by date range.

Key features include social media preservation for:

- Facebook: Bulk capture capabilities, including all posts on a timeline.

- Twitter

- LinkedIn

- YouTube

- TikTok

- Rumble

- TruthSocial

- BlueSky

- Threads

PageFreezer primarily caters to law enforcement agencies, government agencies, legal firms, and investigators.

Pricing is around $3,350 per year for a single user.

2.5.2 MirrorWeb

MirrorWeb focuses on large-scale website archiving rather than single-page preservation, and has some large clients.

2.5.3 Archive-it

Archive-it is a subscription service built by the Internet Archive (see Wikipedia for more details). It is designed for archiving entire websites over time, capturing their evolution and preserving historical snapshots.

Other Tools

Here are a couple of additional tools worth mentioning, though they cater to more specific use cases:

- CivicPlus Social Media Archiving – This tool focuses on government social media archiving and compliance. It is designed to help government agencies back up and manage their social media content to meet regulatory requirements.

- Smarsh – Smarsh specialises in company communications governance and archiving. It is a comprehensive solution for businesses that need to archive and manage communications (e.g. emails, chats, and social media) for compliance, legal, or regulatory purposes.

3. Platform-Specific Archival Tools and Libraries

For archiving content from specific platforms, there are several excellent libraries available. The auto-archiver leverages many of these tools.

Instagram

Instagram archiving can be divided into two categories: public accounts and private accounts.

- Public Accounts: These accounts are accessible to anyone without requiring permission. Example: Farmers Guardian.

- Private Accounts: Access to these accounts requires approval from the account owner. Example: Dave Mateer.

In the auto-archiver, we use HikerAPI to retrieve data from public Instagram accounts.

Instagram Private - Instagrapi

For private Instagram accounts, we rely on Instagrapi, a library developed by the creators of HikerAPI. With 4.6k stars on GitHub, it’s a robust tool for accessing private Instagram data.

- Documentation: Instagrapi Docs

- Usage: The documentation provides excellent tips and guidance. I’ve also developed a proof-of-concept (POC) for integrating Instagrapi into the auto-archiver.

Facebook

For Facebook, I created a custom integration in the auto-archiver specifically designed to archive images, so that images from Facebook can be preserved.

Telegram

The auto-archiver now integrates with Telegram using the Telethon library. You can explore the implementation here.
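To illustrate the general idea (this is not the auto-archiver's actual Telethon code), the sketch below parses a public `t.me/<channel>/<id>` link and downloads that post's media. It assumes you have a Telegram `api_id`/`api_hash` pair. The session name `archiver` and the `downloads` folder are hypothetical, and Telethon will prompt for a phone login the first time it runs.

```python
import os
import re
from typing import Optional, Tuple

# Public post links such as https://t.me/channel/1234 or the preview form https://t.me/s/channel/1234.
# Private-channel links (https://t.me/c/<id>/<msg>) are deliberately not handled in this sketch.
_TME_POST = re.compile(r"^https?://t\.me/(?:s/)?(?!c/)([A-Za-z0-9_]+)/(\d+)/?(?:[?#].*)?$")


def parse_telegram_url(url: str) -> Optional[Tuple[str, int]]:
    """Split a public t.me post URL into (channel username, message id), or return None."""
    match = _TME_POST.match(url)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def download_post_media(url: str, api_id: int, api_hash: str, out_dir: str = "downloads"):
    """Fetch one post with Telethon and save its media; returns the saved path or None."""
    from telethon.sync import TelegramClient  # third-party; imported lazily

    parsed = parse_telegram_url(url)
    if parsed is None:
        raise ValueError(f"Not a public t.me post URL: {url}")
    channel, message_id = parsed
    os.makedirs(out_dir, exist_ok=True)  # make sure the target directory exists first
    with TelegramClient("archiver", api_id, api_hash) as client:
        message = client.get_messages(channel, ids=message_id)
        if message is None or message.media is None:
            return None  # text-only post, or the message no longer exists
        return client.download_media(message, file=out_dir)
```

Keeping the URL parsing separate from the network call makes it easy to validate a whole spreadsheet column of links before logging in to Telegram at all.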

X / Twitter

As of January 2025, yt-dlp is a fantastic tool for archiving content from X (formerly Twitter). For an extra layer of reliability, the paid X API serves as a backup.

Videos (e.g. YouTube, TikTok)

When it comes to video archiving, yt-dlp is the standard tool.
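A minimal sketch of an archival download through yt-dlp's Python API, which should apply both to X posts and to platforms like YouTube and TikTok. The option choices (keeping the `.info.json` metadata and thumbnail, refusing playlists, the output template) are assumptions made for archiving, not the auto-archiver's configuration. Merging separate video and audio streams requires ffmpeg.

```python
def build_ydl_options(out_dir: str = "archive") -> dict:
    """yt-dlp options for an archival download: best quality plus metadata sidecar files."""
    return {
        # e.g. archive/youtube/<video id>.mp4
        "outtmpl": f"{out_dir}/%(extractor)s/%(id)s.%(ext)s",
        "format": "bestvideo*+bestaudio/best",
        "writeinfojson": True,    # keep title, uploader, timestamps etc. next to the media
        "writethumbnail": True,
        "noplaylist": True,       # archive the single URL, not a whole playlist
    }


def archive_video(url: str, out_dir: str = "archive") -> dict:
    """Download one video with yt-dlp and return its metadata as a JSON-serialisable dict."""
    import yt_dlp  # third-party; imported lazily

    with yt_dlp.YoutubeDL(build_ydl_options(out_dir)) as ydl:
        info = ydl.extract_info(url, download=True)
        return ydl.sanitize_info(info)
```

`archive_video(url)` returns the metadata dictionary, which is a convenient place to pick up the title, uploader and upload date for your records.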

VK

The auto-archiver also integrates with VK through the VK URL Scraper.

Screenshots

For capturing screenshots, Playwright is superb. I typically run it in headful mode (rather than headless, as the latter is often easily detected) to obtain more reliable captures. On my Linux servers, I utilise xvfb-run to operate within a virtual framebuffer.
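A small sketch of that setup using Playwright's Python sync API. It launches Chromium with `headless=False`, takes a full-page screenshot, and builds an `xvfb-run` command for servers with no display. The script name `capture.py`, the viewport size, the timeout and the command-line arguments are illustrative assumptions.

```python
import sys


def xvfb_command(script: str, *args: str) -> list:
    """Command line that runs a headful browser script inside a virtual X framebuffer (Linux)."""
    # --auto-servernum asks xvfb-run to pick a free display number.
    return ["xvfb-run", "--auto-servernum", sys.executable, script, *args]


def capture(url: str, out_path: str, headless: bool = False) -> None:
    """Full-page screenshot with Playwright's Chromium, headful by default."""
    from playwright.sync_api import sync_playwright  # third-party; imported lazily

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=headless)
        try:
            page = browser.new_page(viewport={"width": 1280, "height": 800})
            page.goto(url, timeout=60_000)  # large platforms can be slow to load
            page.screenshot(path=out_path, full_page=True)
        finally:
            browser.close()


if __name__ == "__main__" and len(sys.argv) == 3:
    capture(sys.argv[1], sys.argv[2])
```

Saved as `capture.py`, this would run on a Linux server as `xvfb-run --auto-servernum python capture.py https://example.com shot.png`. On a desktop machine the `xvfb-run` wrapper isn't needed.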

For a professional solution, urlbox.com offers a top-tier screenshotting service.

4. Manually Archiving

When manually archiving content, start simple and see what works for you.

Images

- Chrome Save as PDF: This feature works well, especially for capturing long, scrolling pages.

- Chrome Save as MHTML: This option is also effective. Once the page is saved as MHTML, you can right-click to save any images you need.

- Screenshots: On a PC, Greenshot is an excellent free tool. On a Mac, simply use the shortcut Cmd + Shift + 5 or other variants for capturing screenshots.

- Image Save As: When available, you can right-click an image to save it directly, or use the F12 developer tools to locate and extract the image source.

Videos

- YouTube and Similar Platforms: Note that while gb.savefrom.net is currently blocked from the UK, yt1d.com is operational and can be used to save non-copyrighted videos.

Chromium Extensions

These extensions submit to archiving services:

- Archive Page (archive.ph): This Chrome extension, with 90k users, a 4.4-star rating, and 123 reviews, simply submits pages to archive.ph.

- Wayback Machine: This extension allows you to submit pages directly to the Wayback Machine.

These extensions attempt to save the web page themselves:

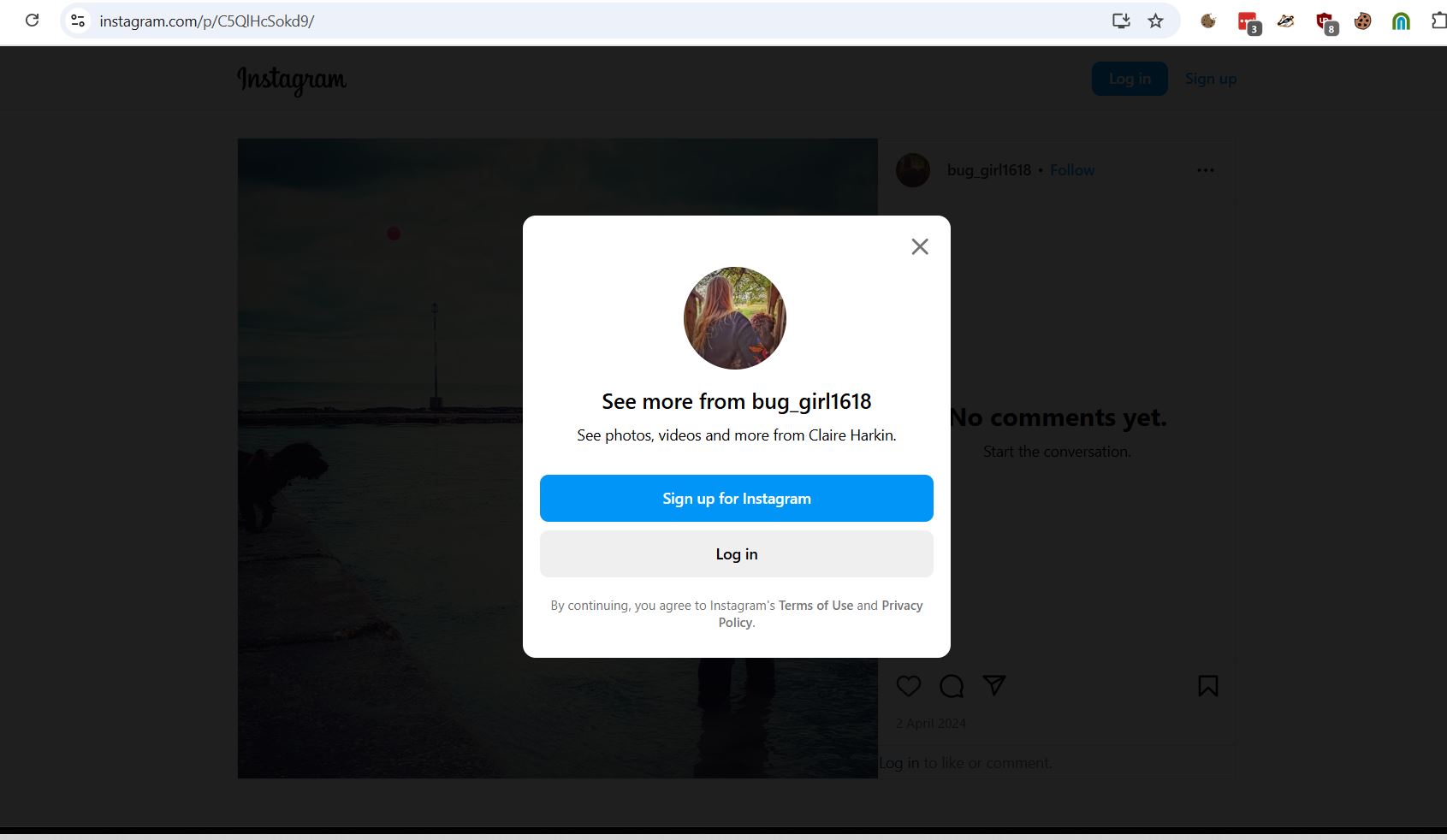

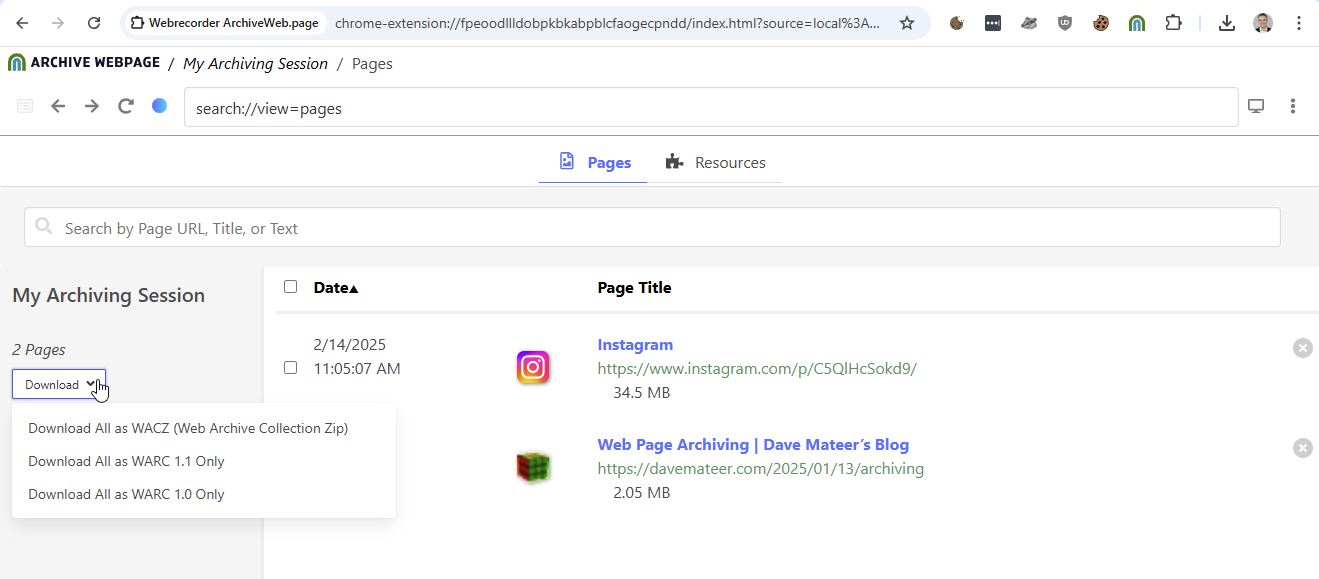

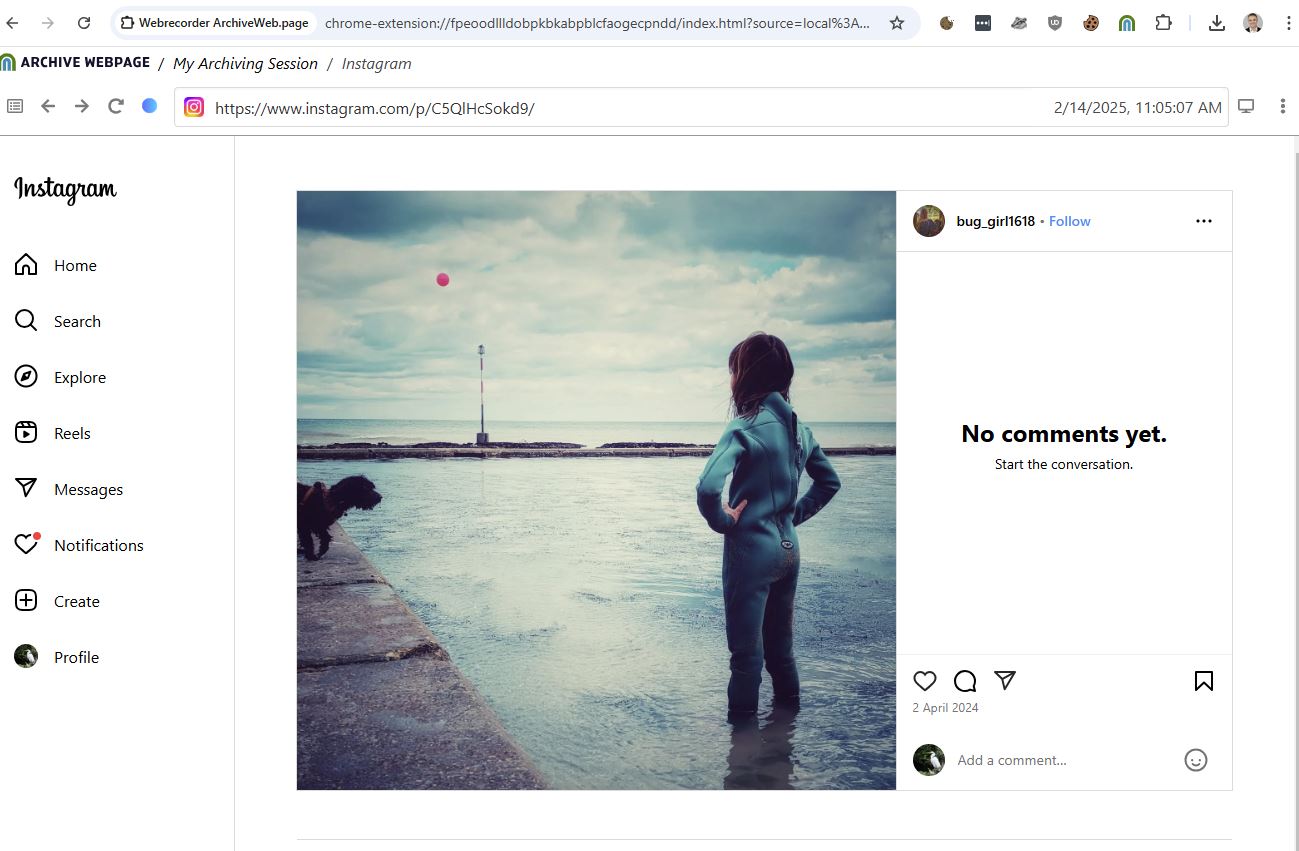

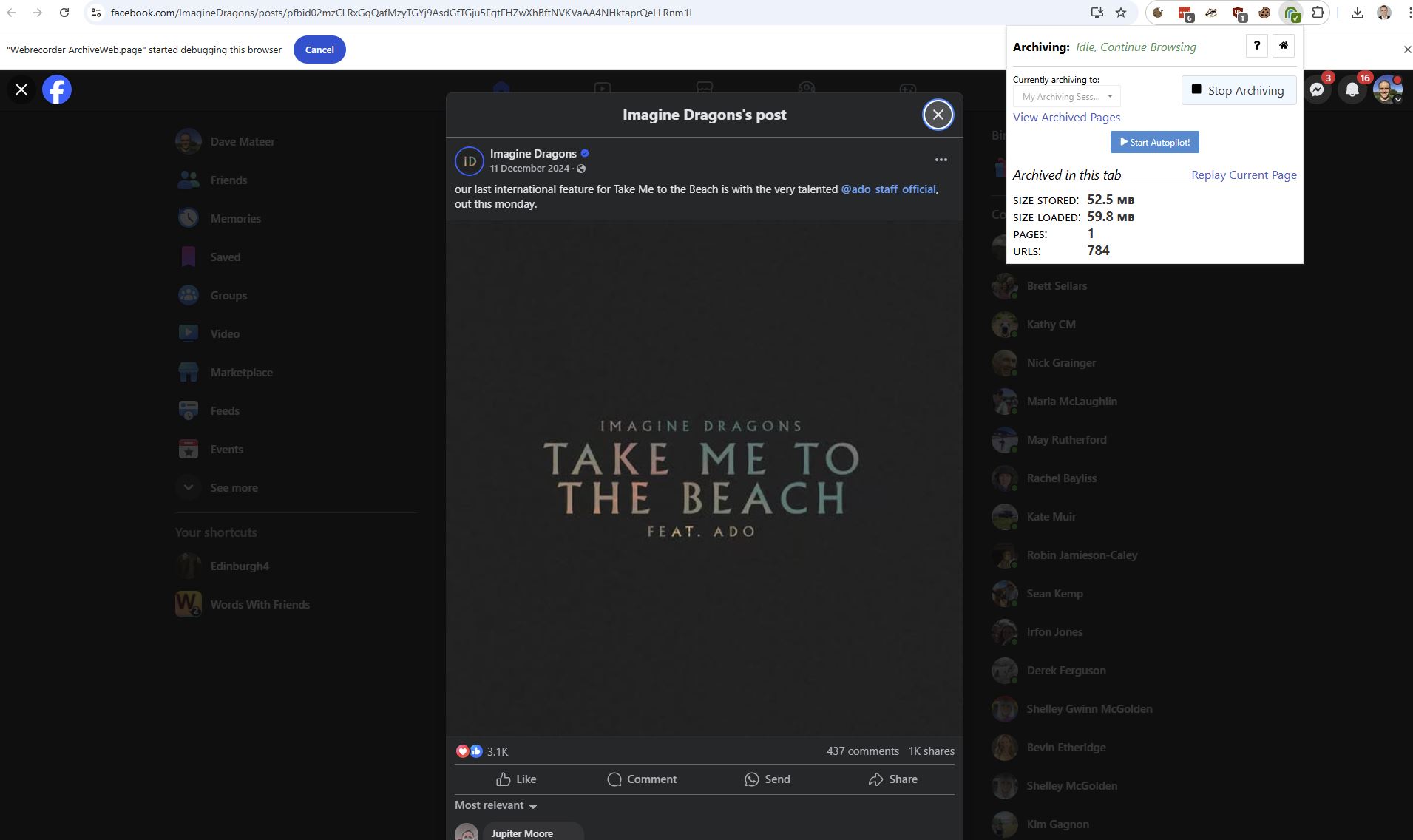

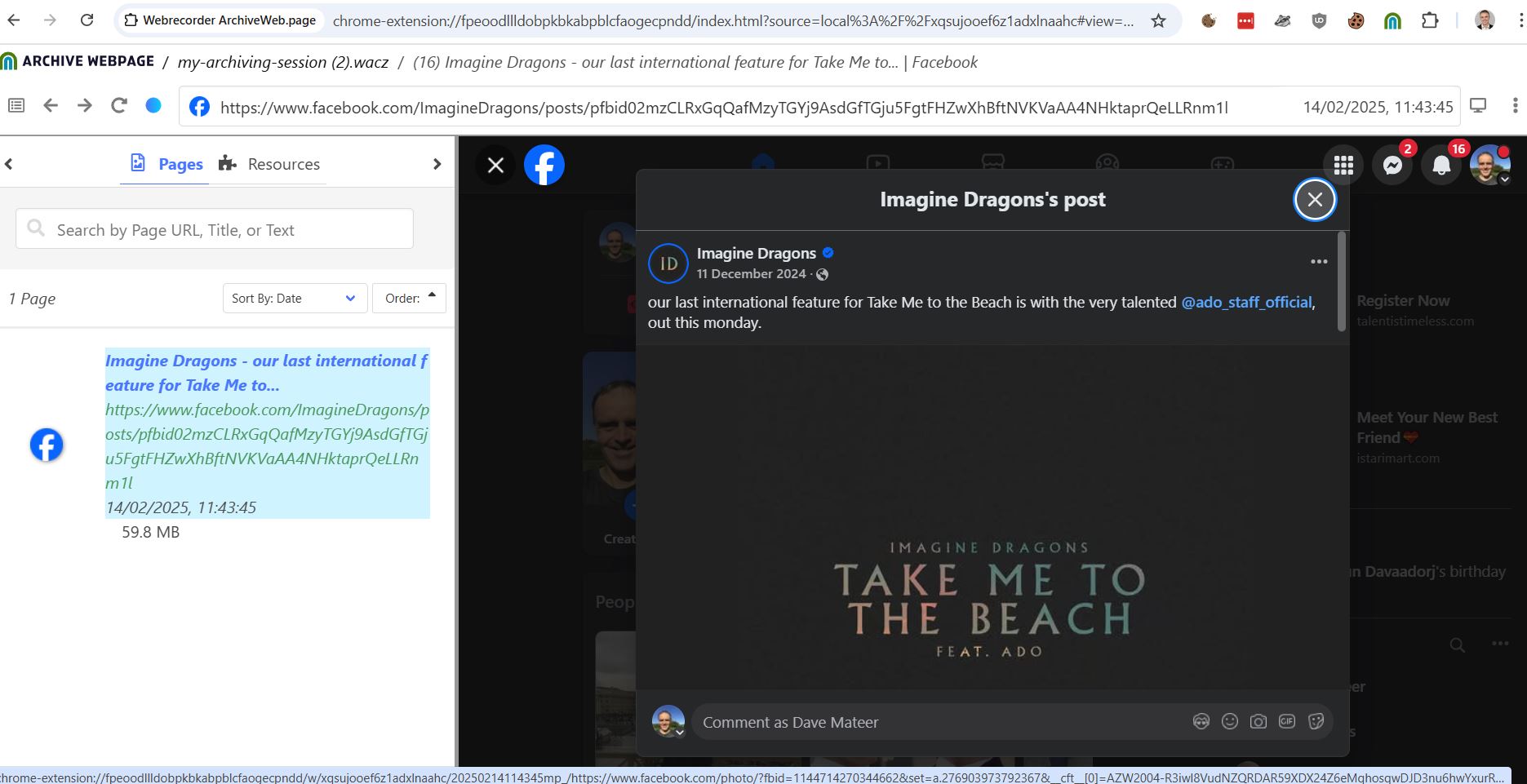

- Webrecorder Archive Webpage: This extension archives webpages as WACZ or WARC files.

Archive Webpage dashboard. This is offline, using browser local storage to view the saved session.

Again, the machine is offline while viewing this.

Here is another computer, not logged into Instagram.

Here is that same computer viewing the WACZ captured on the logged-in machine.

ArchiveWeb.page allows users to archive what they browse, storing captured data directly in the browser. Users can download this data as files to their hard drive. Users can also delete any and all archived data at any time. ArchiveWeb.page does not collect any usage or tracking data.

Capturing the page while logged into Facebook.

I downloaded the WACZ, loaded it on a different machine, and got a good render. However, the infinitely scrolling comments do not work properly.

I’m excited about this tool because it lets you stay logged into sites and save exactly what the user sees.

- SingleFile: This extension, with 200k users, saves the complete webpage as a single file, rewriting the HTML to embed assets.

5. Appendix

This article is an opinionated list, and I won’t mention tools that are not useful to me! I am not looking at multi-page web crawling or at investigation/evidence/case management systems, which archiving is often a part of.

The awesome-web-archiving list contains more, with a much broader scope.

WARC and WACZ file formats

The WACZ format is a more recent development, designed to simplify the sharing and replay of web archives. It is essentially a zipped collection of WARC files, along with additional metadata and an index to facilitate easier access and replay of the archived content.

WARC stands for Web ARChive.

https://wiki.archiveteam.org/index.php/The_WARC_Ecosystem

Both file formats can be viewed on replayweb.page.

ReplayWeb.page was somewhat confusing when I started, but testing showed that it really does work offline, faithfully replaying what was captured at the time the WACZ/WARC was created.
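Because a WACZ is a ZIP file, you can inspect one with Python's standard library. This sketch lists the WARC files under `archive/` and re-checks each SHA-256 recorded in `datapackage.json`. It assumes the layout and `sha256:<hex>` hash notation described in the WACZ specification, and it does not check signatures.

```python
import hashlib
import io
import json
import zipfile


def inspect_wacz(source) -> dict:
    """Summarise a WACZ: its WARC files and any resources whose SHA-256 doesn't match.

    `source` may be a path, a binary file object, or the raw bytes of the WACZ.
    Assumes WARCs live under archive/ and datapackage.json lists resources with
    "path" and "hash": "sha256:<hex>" entries.
    """
    if isinstance(source, (bytes, bytearray)):
        source = io.BytesIO(source)
    with zipfile.ZipFile(source) as zf:
        warcs = sorted(
            n for n in zf.namelist()
            if n.startswith("archive/") and n.endswith((".warc", ".warc.gz"))
        )
        resources = json.loads(zf.read("datapackage.json")).get("resources", [])
        mismatches = []
        for resource in resources:
            actual = "sha256:" + hashlib.sha256(zf.read(resource["path"])).hexdigest()
            if resource.get("hash") != actual:
                mismatches.append(resource["path"])
    return {"warcs": warcs, "resource_count": len(resources), "mismatches": mismatches}
```

An empty `mismatches` list means every file still matches the hash recorded when the WACZ was packaged, which is a quick sanity check before loading it into ReplayWeb.page.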

MHTML

MHTML (MIME HTML) is essentially a single-page web archive stored in one file, which can be very useful. Chromium browsers can save a page as MHTML manually using a right click.

https://davemateer.com/assets/Instagram.mhtml shows Instagram being archived well.

Compare this with the SingleFile open source project, which rewrites the page assets into a single HTML file.
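Since MHTML is a MIME `multipart/related` message, Python's standard `email` package can read it. Here is a sketch that pulls every image out of a saved `.mhtml` file, roughly the programmatic equivalent of right-clicking and saving each image. The `image_000.png` naming scheme and the helper names are my own choices for illustration.

```python
import email
import email.policy
import mimetypes
from pathlib import Path


def extract_mhtml_images(mhtml_bytes: bytes) -> list:
    """Return (original URL, suggested file name, image bytes) for each image part in an MHTML file."""
    message = email.message_from_bytes(mhtml_bytes, policy=email.policy.default)
    images = []
    for part in message.walk():
        if part.get_content_maintype() != "image":
            continue  # skip the HTML, CSS and the multipart container itself
        data = part.get_payload(decode=True)  # undoes base64 / quoted-printable encoding
        if not data:
            continue
        extension = mimetypes.guess_extension(part.get_content_type()) or ".bin"
        name = f"image_{len(images):03d}{extension}"
        images.append((str(part.get("Content-Location", "")), name, data))
    return images


def save_images(images: list, out_dir: str) -> None:
    """Write extracted images to out_dir, creating it if needed."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for _url, name, data in images:
        (out / name).write_bytes(data)
```

For example, `save_images(extract_mhtml_images(Path("Instagram.mhtml").read_bytes()), "images")` would write the images next to a list of the URLs they originally came from.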

Hashing

A hash serves as a unique identifier for a file—a single value that confirms the file’s integrity. We use hashes to ensure that images remain unchanged and to verify that our HTML pages (which incorporate individual hashes, effectively a hash of hashes) have not been tampered with.
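A minimal sketch of that kind of hashing, using SHA-256 from Python's `hashlib`. Combining per-file hashes into a sorted `name hash` manifest and hashing the manifest is one simple way to get a "hash of hashes". It is an illustration, not necessarily how the auto-archiver builds its HTML page hashes.

```python
import hashlib


def sha256_bytes(data: bytes) -> str:
    """Hex SHA-256 of an in-memory value."""
    return hashlib.sha256(data).hexdigest()


def sha256_file(path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large videos don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_of_hashes(file_hashes: dict) -> str:
    """Combine {file name: hex hash} into one value.

    Sorting by name makes the result independent of insertion order, while any
    change to a file (and therefore its hash) changes the combined value.
    """
    manifest = "\n".join(f"{name} {h}" for name, h in sorted(file_hashes.items()))
    return sha256_bytes(manifest.encode("utf-8"))
```

In practice you would call `sha256_file` on each archived image or video, store the results, and publish or timestamp only the single `hash_of_hashes` value for the page.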

Before proceeding, it’s worth reflecting on why we archive content in the first place.

(The following insights come from a colleague in the legal sector)

If the objective is to support future legal proceedings, there is an argument that private retention of data may be more than sufficient: “I feel these kinds of systems overcomplicate an already reliable process, one that is accepted by courts around the world for the safe management of digital evidence.

The courts are pragmatic; if you can confidently state that you performed a particular action at a specific time, resulting in the production of certain data, the risk of a two-year sentence for contempt of court is more than enough to deter any manipulation.

Adding further complexity doesn’t necessarily build trust—it can instead hinder the ability of investigators, juries, or the judiciary to understand the processes and their purposes.”

Options for Hashing

But where can you store these hashes in a secure and immutable manner—that is, ensuring they cannot be altered after creation? Consider the following options:

- Blockchains

- Immutable Blog Services (e.g. X/Twitter)

- Timestamping Services

- Write Once, Read Many (WORM) Storage

- Git with Cryptographic Signing (Internal Use)

- Secure Databases with Write-Once Constraints

- Physical Print / Cold Storage

Although Twitter has been a reliable option in the past, it is increasingly falling out of favour both politically and in terms of trustworthiness. Accounts can be blocked and tweets removed, which may undermine the evidence.

Timestamping

Timestamping is a method that can prove a file existed at a specific moment in time, which is invaluable for legal purposes—such as confirming that a file has not been altered after a given date.

One approach is to use external timestamp authorities that adhere to RFC3161, as illustrated by the auto-archiver implementation.

In summary, while a hash confirms that a file remains unaltered, timestamping provides a reliable record of when the file existed. It essentially builds upon the hash by incorporating the crucial element of time—a feature that is especially valuable in legal, archival, or compliance contexts.
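To make the RFC 3161 flow concrete, here is a hand-rolled sketch of the request side. It hashes the data with SHA-256, wraps the digest in a minimal DER-encoded `TimeStampReq`, and POSTs it to a Time Stamping Authority with the `application/timestamp-query` content type. The TSA URL is left as a parameter, the request omits the optional nonce and policy fields, and the response is returned unverified. A real deployment would want a proper ASN.1/RFC 3161 library and would verify the reply (for example with `openssl ts -verify`).

```python
import hashlib
import urllib.request

# DER encoding of the SHA-256 AlgorithmIdentifier: SEQUENCE { OID 2.16.840.1.101.3.4.2.1, NULL }
_SHA256_ALG_ID = bytes.fromhex("300d06096086480165030402010500")


def _der(tag: int, content: bytes) -> bytes:
    """Encode a DER tag-length-value; short-form lengths suffice for this small request."""
    if len(content) > 127:
        raise ValueError("long-form lengths are not needed for this sketch")
    return bytes([tag, len(content)]) + content


def build_timestamp_request(data: bytes) -> bytes:
    """Minimal TimeStampReq: version 1, SHA-256 messageImprint, certReq TRUE, no nonce."""
    digest = hashlib.sha256(data).digest()
    message_imprint = _der(0x30, _SHA256_ALG_ID + _der(0x04, digest))  # SEQUENCE { alg, OCTET STRING }
    version = _der(0x02, b"\x01")                                        # INTEGER 1
    cert_req = _der(0x01, b"\xff")  # BOOLEAN TRUE: ask the TSA to include its certificate
    return _der(0x30, version + message_imprint + cert_req)


def request_timestamp(data: bytes, tsa_url: str) -> bytes:
    """POST the request to a TSA and return the raw DER TimeStampResp (not verified here)."""
    req = urllib.request.Request(
        tsa_url,
        data=build_timestamp_request(data),
        method="POST",
        headers={"Content-Type": "application/timestamp-query"},
    )
    with urllib.request.urlopen(req) as resp:
        return resp.read()
```

The TSA signs the hash, not the file, so the archived content itself never leaves your infrastructure; only the 32-byte digest is sent.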

Technical details on verifying timestamps

OpenTimestamps

The timestamping method described above relies on the authority of various trusted root entities.

OpenTimestamps is a protocol designed for timestamping files and proving their integrity over time. It anchors the hash in the Bitcoin blockchain; Bitcoin has been operational for 16 years, and OpenTimestamps has been around for 8 years.

The Berkeley Protocol

An international protocol outlining the minimum professional standards for the identification, collection, preservation, verification, and analysis of digital open source information, with an aim toward improving its effective use in international criminal, humanitarian and human rights investigations.

A next step (TODO) is to look at how the auto-archiver meets these collection criteria, and whether other ways of archiving do it any better.

People and Interesting Articles

- Andrew Jackson – Technical Architect at the Digital Preservation Coalition.

- Archiving Social Media with Browsertrix

- Archivists Saving Work from data.gov

- bag-nabit – Stamps with a certificate so that people can use rclone.

- BitIt protocol

- Scoop – Outputs to WACZ and WARC.

Conclusion

I run a business called Auto-Archiver.com, which offers hosted versions of the Bellingcat Auto-Archiver tool. As an entrepreneur, I’m asking:

- Are there better products out there? (Not that I know of! Although ArchiveWeb.page is a great tool I want to explore more)

- Are my customers getting their money’s worth? (I believe so)

- Should I partner with other organisations to sell their products? (Not decided yet)

- Who needs archiving help? (Investigators, researchers, and organisations dealing with sensitive or time-sensitive data.)

This post reflects my journey in the world of archiving, the tools I’ve encountered, and the questions I’m asking as I grow my business and help others.

If you’re passionate about digital preservation, need advice, or have insights, please get in touch at davemateer@gmail.com.