Azure Functions to Count Downloads from Blob Storage

We use Azure Blob Storage to host .mp4 videos for a client and wanted an accurate download count on a per-video basis. Here was my initial question on Stack Overflow.

I am using a strategy from Chris Johnson and his source here

- Azure Functions timer every 5 minutes to copy $logs files to a new container called showlogs

- Azure Functions blob watcher on the showlogs container that parses the data and inserts it into an MSSQL db.

All code shown below is in my AzureFunctionBlobDownloadCount solution.

What is Azure Functions?

From Azure Functions documentation:

Azure Functions is a serverless compute service that enables you to run code on-demand without having to explicitly provision or manage infrastructure.

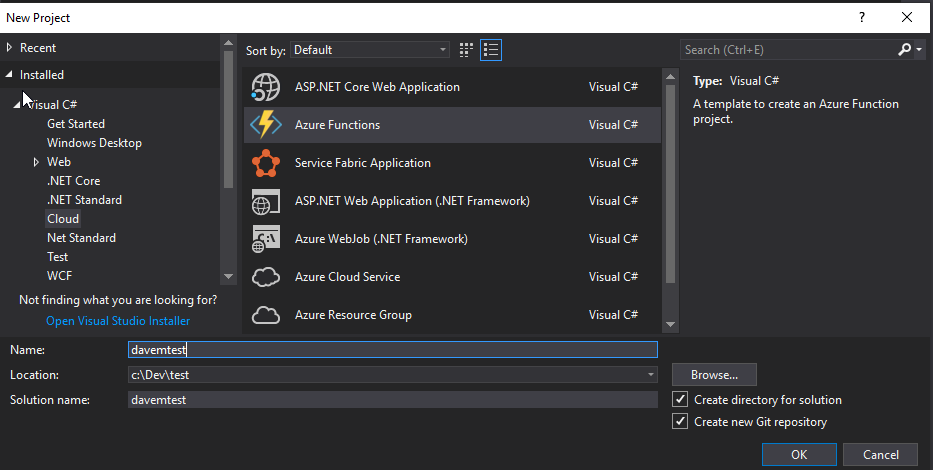

Let’s create an Azure Function locally in Visual Studio: choose New Project and create the simplest possible function.

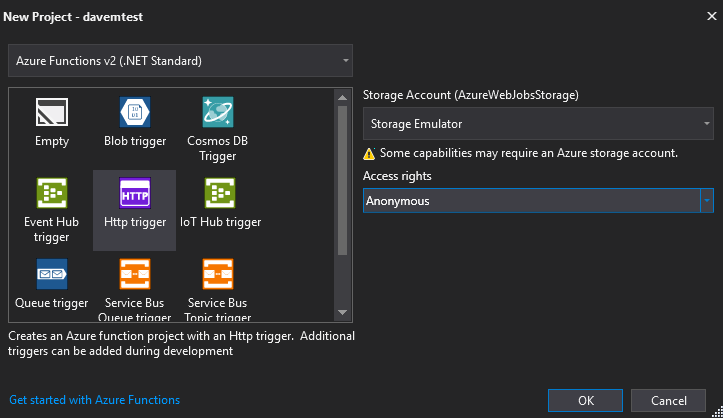

then

Functions v2 (.NET Standard) is where new feature work and improvements are being made so that is what we are using.

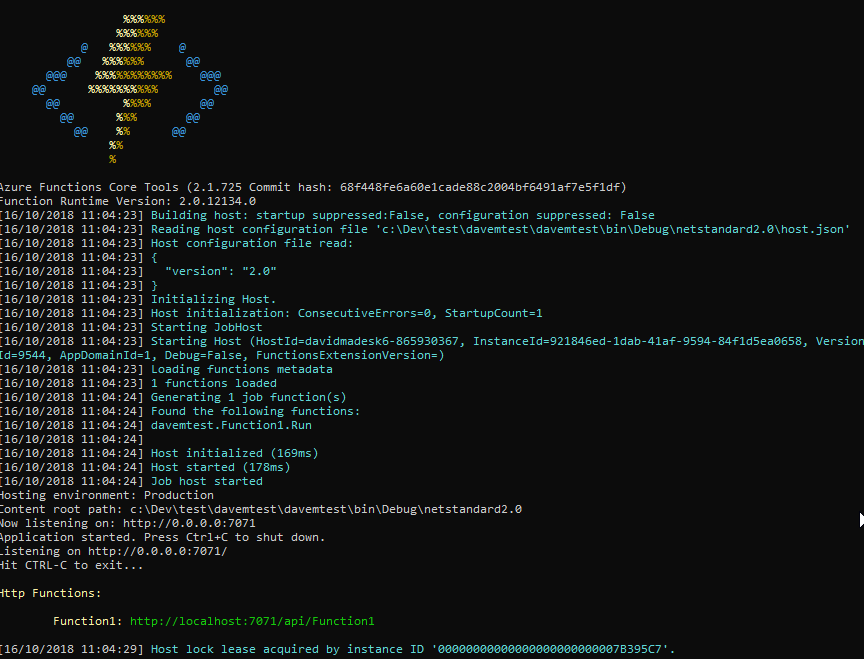

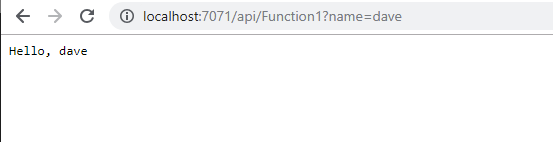

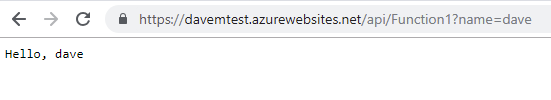

And if we go to http://localhost:7071/api/Function1?name=dave we’ll get back

We have created an Azure Function locally which responds to an HTTP Request

Deploy to Live

Let’s deploy this to Azure

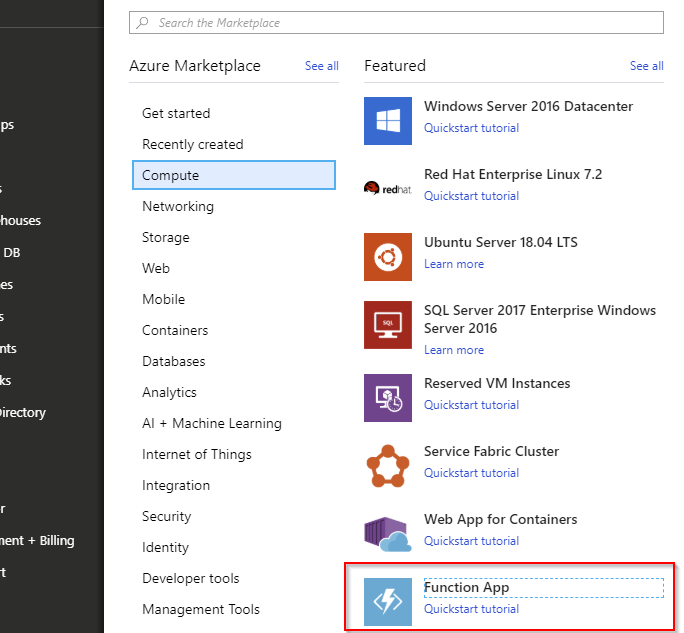

Create a new function from the Azure portal

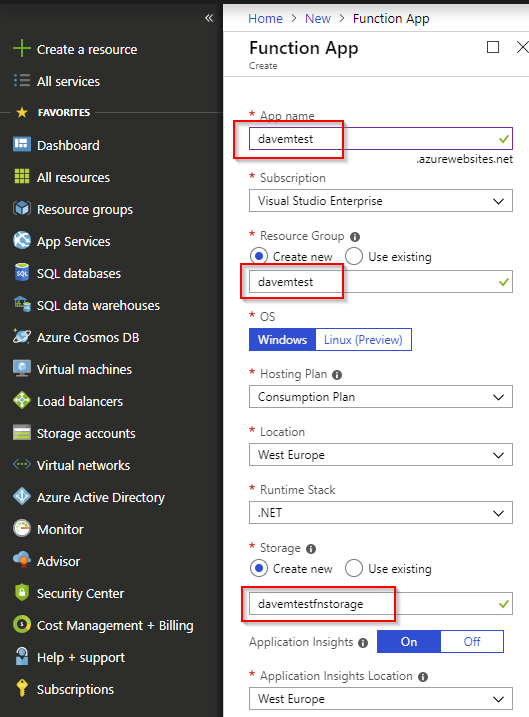

Name the function app, resource group (RG) and storage account.

These assets are created

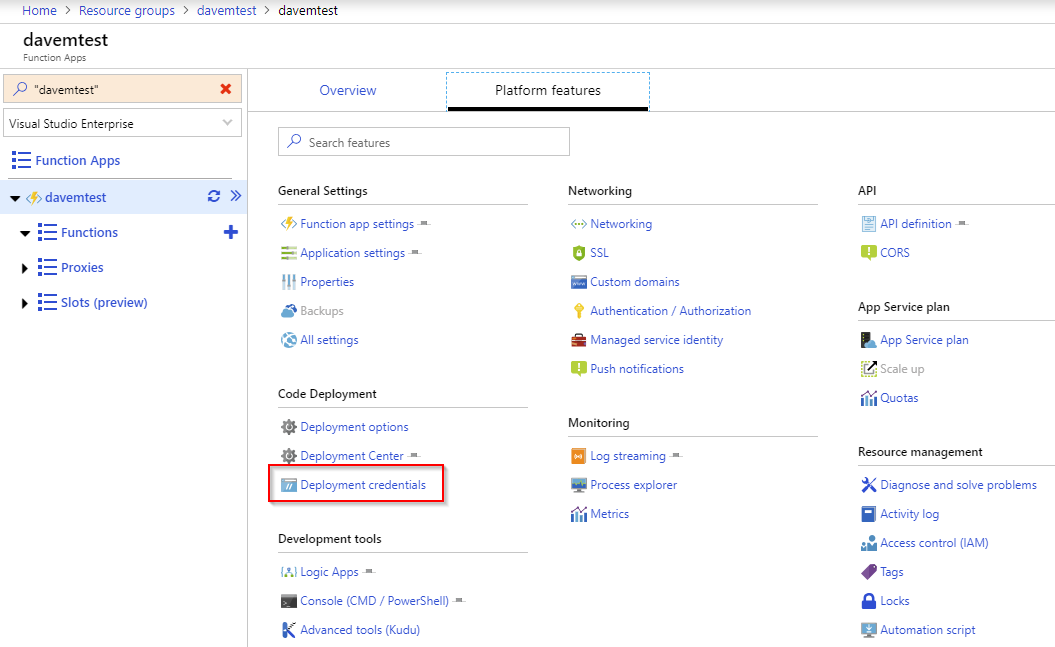

Inside the newly created function, let’s set up how to deploy to it.

Deploy using Local Git

For local development I find Local Git deployment excellent. Use Deployment Center to set it up, then:

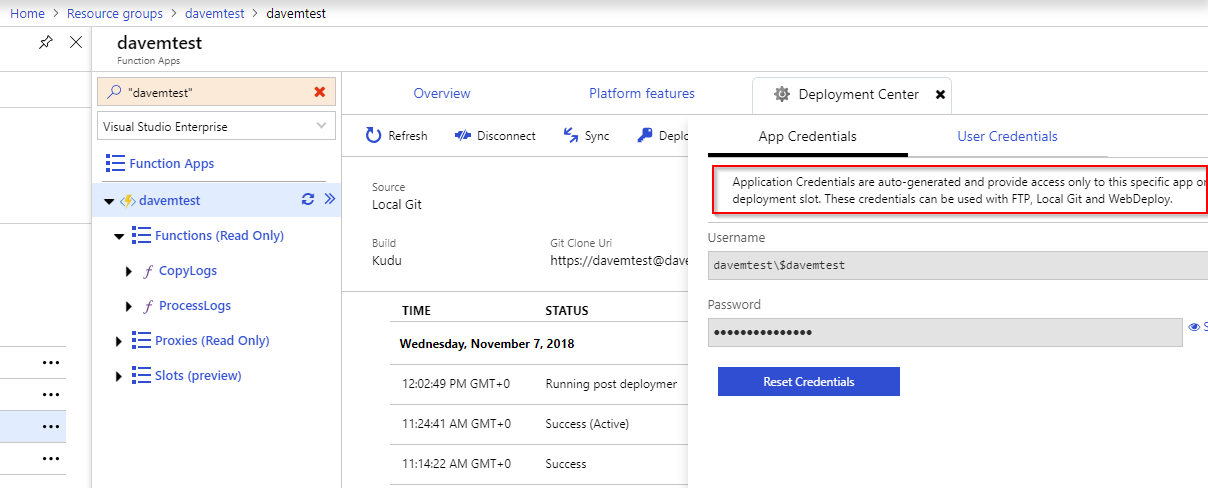

Use the App Credentials so they are specific to this app only. User credentials apply across your entire Azure estate.

git remote add azure https://$davemtest@davemtest.scm.azurewebsites.net:443/davemtest.git

git push -u azure master

Setting up deployment from the command line. Using -u (--set-upstream) means that afterwards you can simply run git push without specifying the remote.

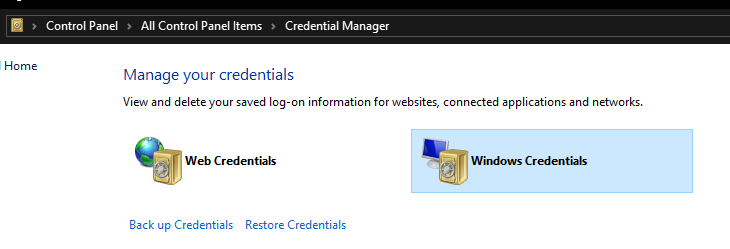

Sometimes you’ll get a badly cached password - this is where you delete it.

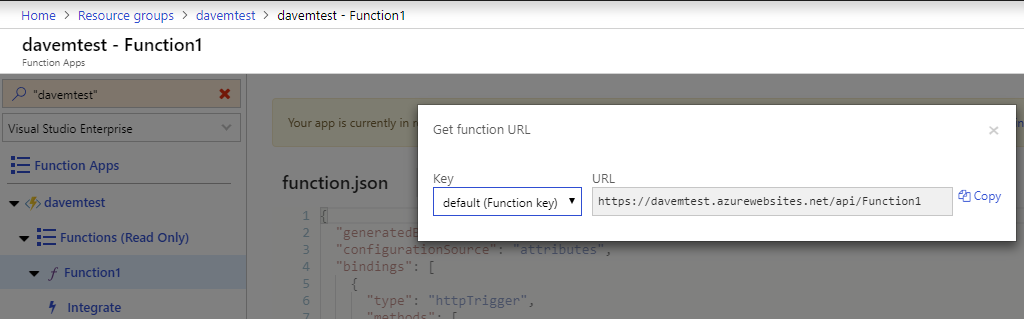

If all has gone well the function will deploy. So now, grab the URL

Testing the function - it worked!

Blob Storage

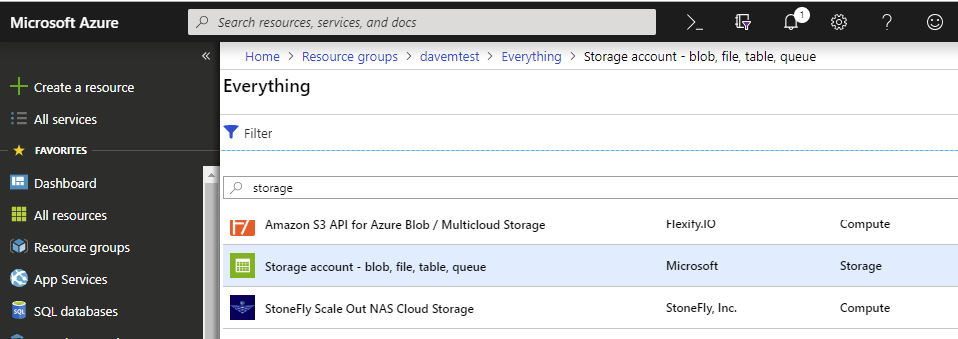

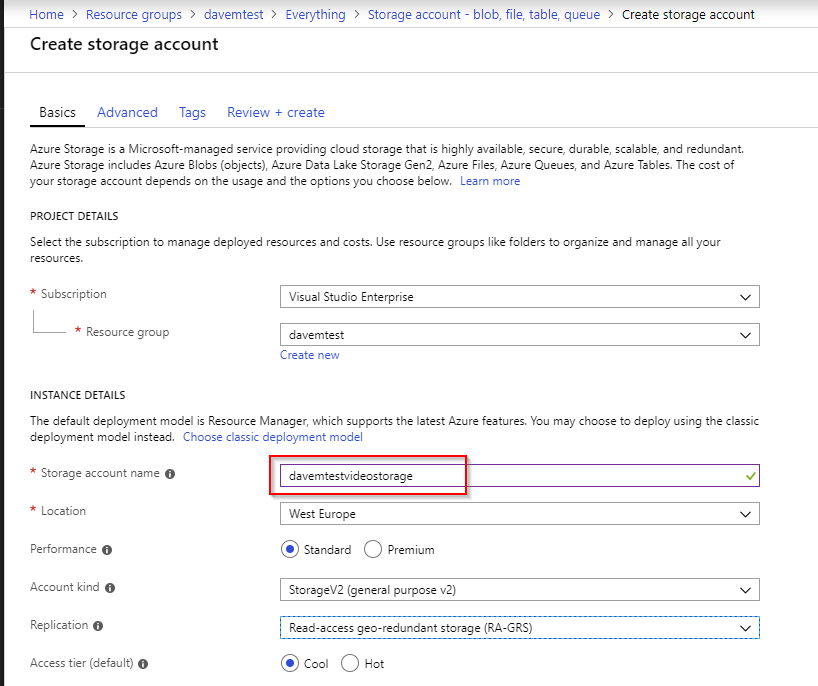

Now that we can deploy Azure Functions, we need to set up some storage to host some .mp4 video files:

Add a Storage account to the resource group

Handy overview of types of storage accounts. Essentially v2:

General-purpose v2 accounts deliver the lowest per-gigabyte capacity prices for Azure Storage

Handy storage pricing calculator here showing what hot/cool costs, and the different types of resilience:

Basically, in increasing order of cost and uptime, using Storage V2 (general purpose v2):

- Locally-redundant storage (LRS)

- Geo-redundant storage (GRS)

- Read-access geo-redundant storage (RA-GRS)

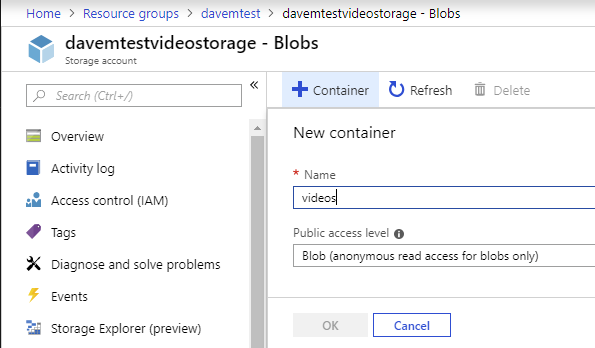

Create a videos container and put anonymous read access on it.

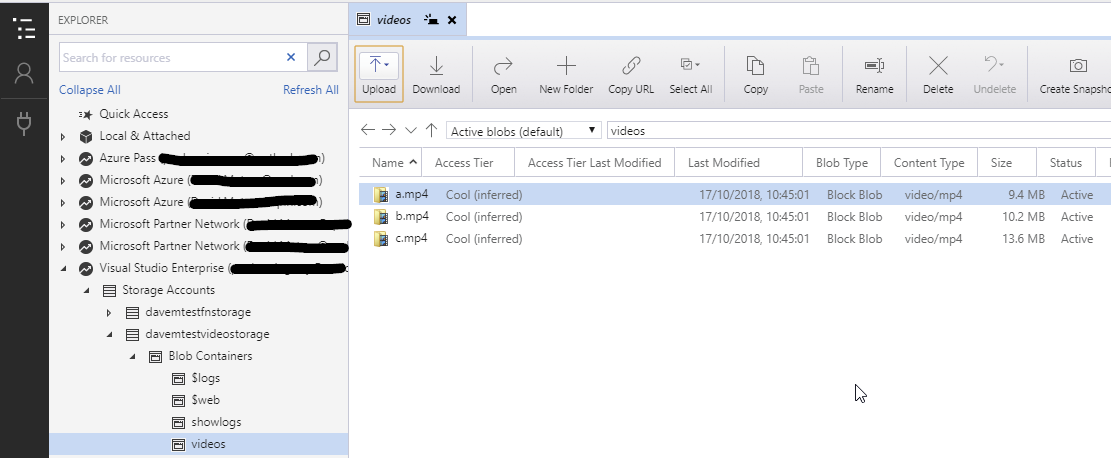

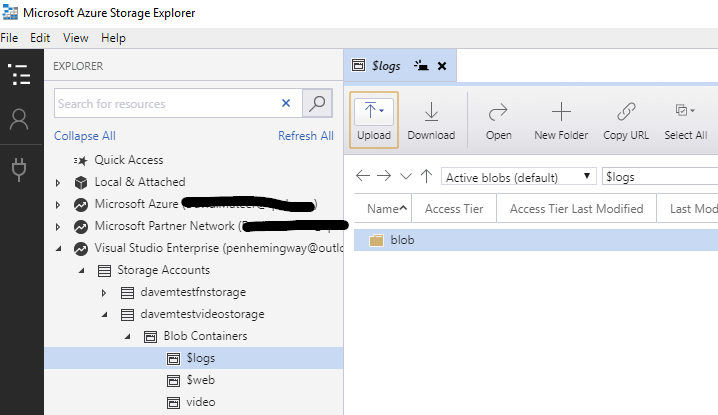

Use Azure Storage Explorer to put up some test videos.

https://davemtestvideostorage.blob.core.windows.net/videos/a.mp4 (or whatever your blob storage is called) should now work. Seeking to a specific time in the video will not work yet (more detail). Use this AzureBlobVideoSeekFix console app to update the offending namespace.

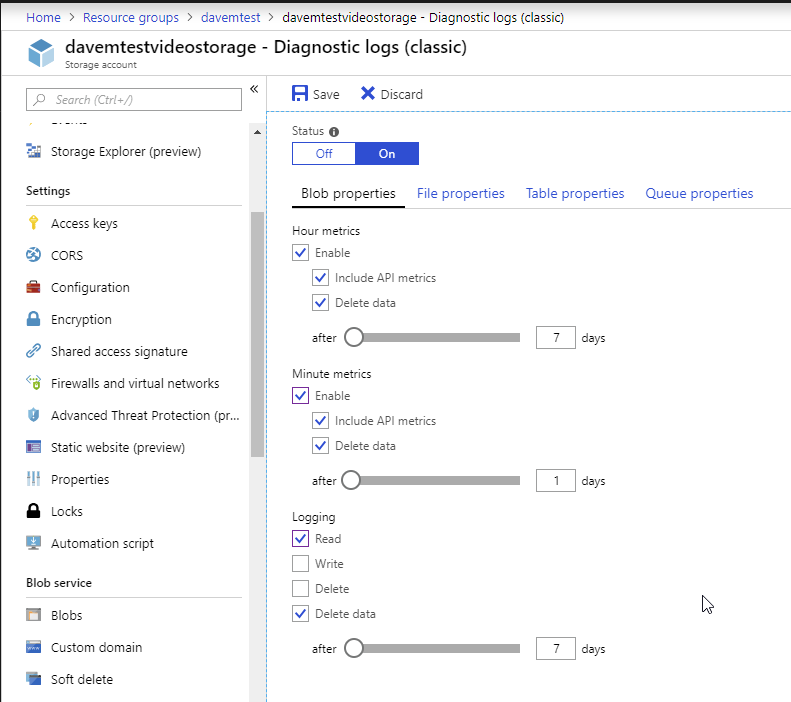

Turn on logging of downloads:

This will create a $logs folder only visible in Azure Storage Explorer.

This $logs folder holds the logs for each download from blob storage. In principle we just have to watch this folder with a Blob trigger (as the $logs files are blobs), then parse each file and store the data in a SQL Azure db.

In practice, that strategy misses some downloads in high-volume situations.

Copy Logs

So we will use a similar strategy to Chris Johnson and his source:

- Create a timer trigger function to copy $logs files to a new container called showlogs

- Put a blob watcher on the showlogs container
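One detail that can help the copy step: Storage Analytics names Blob service log blobs in the form blob/YYYY/MM/DD/hhmm/<counter>.log inside $logs, with the minutes part always 00. A timer function could therefore list only the last few hours rather than the whole container. The following is a minimal sketch of building those prefixes. The class and method names are hypothetical and are not taken from the linked source.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper (not from the original solution).
public static class LogPrefixes
{
    // Builds the blob-name prefix for one hour of Blob service logs,
    // e.g. "blob/2019/03/01/1400/". The minutes part is always "00".
    public static string ForHour(DateTime utc)
    {
        return "blob/"
            + utc.ToString("yyyy'/'MM'/'dd'/'HH", CultureInfo.InvariantCulture)
            + "00/";
    }

    // Prefixes for the last `hours` hours, oldest first,
    // ending with the hour that contains `nowUtc`.
    public static IEnumerable<string> Recent(DateTime nowUtc, int hours)
    {
        for (int i = hours - 1; i >= 0; i--)
        {
            yield return ForHour(nowUtc.AddHours(-i));
        }
    }
}
```

Each prefix could then be passed to whatever blob listing call the copy function uses, so that only recent log files are examined on each run.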

It is useful to run the function locally to test it is working.

Full source

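    public static class CopyLogs
    {
        // RunOnStartup = true makes debugging easier both locally and live
        [FunctionName("CopyLogs")]
        // Note: "0 */30 * * * *" fires every 30 minutes; "0 */5 * * * *" would fire every 5 minutes
        public static void Run([TimerTrigger("0 */30 * * * *", RunOnStartup = true)] TimerInfo myTimer, ILogger log)
        {
            log.LogInformation($"C# CopyLogs Time trigger function executed at: {DateTime.Now}");

            // The connection string comes from app settings (local.settings.json when running locally)
            var container = CloudStorageAccount.Parse(GetEnvironmentVariable("DaveMTestVideoStorageConnectionString")).CreateCloudBlobClient().GetContainerReference("showlogs/");

            // ... the rest of the function is in the full source
        }
    }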

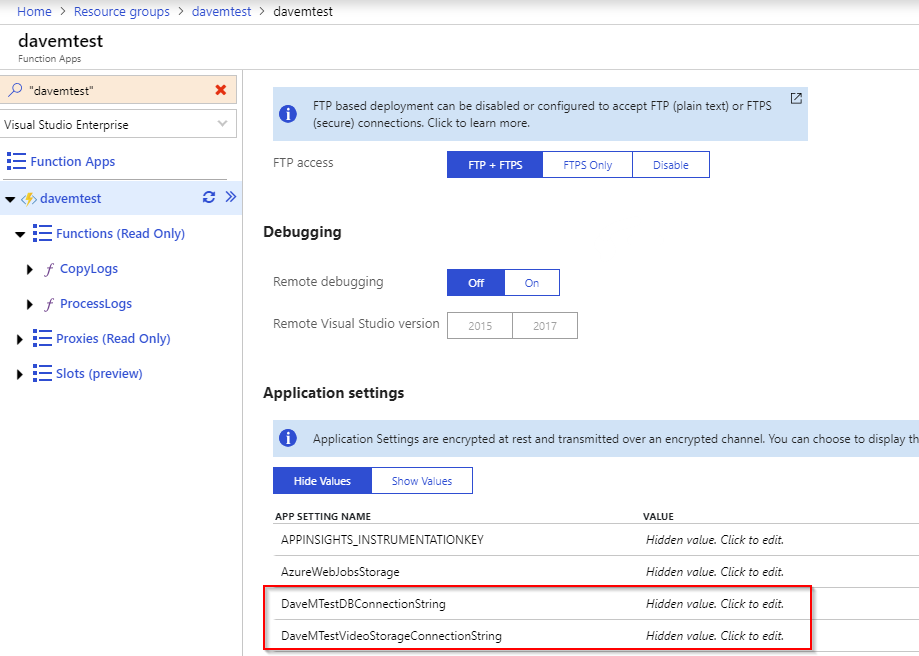

Part of the code showing how to get the connection string. For testing locally you’ll need a local.settings.json which isn’t uploaded to Azure Functions.

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "UseDevelopmentStorage=true",
        "FUNCTIONS_WORKER_RUNTIME": "dotnet",
        "DaveMTestVideoStorageConnectionString": "DefaultEndpointsProtocol=https;AccountName=davemtestvideostorage;AccountKey=SECRETKEYHERE;EndpointSuffix=core.windows.net"
      }
    }

and on live:

So we should now be able to connect to storage on local and live.
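The body of the GetEnvironmentVariable helper is not shown in the excerpt above. Since Azure Functions exposes app settings (and the Values section of local.settings.json when running locally) as environment variables, a helper along these lines would work in both places. This is a sketch under that assumption, and the Settings class name is hypothetical.

```csharp
using System;

// Hypothetical wrapper; the original helper may look different.
public static class Settings
{
    // Reads a setting by name from the process environment and fails
    // loudly when it is missing, rather than passing null to the storage client.
    public static string GetEnvironmentVariable(string name)
    {
        string value = Environment.GetEnvironmentVariable(name, EnvironmentVariableTarget.Process);
        if (string.IsNullOrEmpty(value))
        {
            throw new InvalidOperationException($"Setting '{name}' is not configured.");
        }
        return value;
    }
}
```

Throwing early makes a missing or misspelled setting show up as a clear error in the function log instead of a less obvious parse failure later on.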

It seems you are supposed to use App Settings for Storage Account connection strings, but Connection String for a SQL connection string as described in the Microsoft docs. I’ve found that app settings work for both.

Azure Functions Database gives more details on why Connection Strings may be better - possibly better integration with VS.

Setup DB and File Parsing

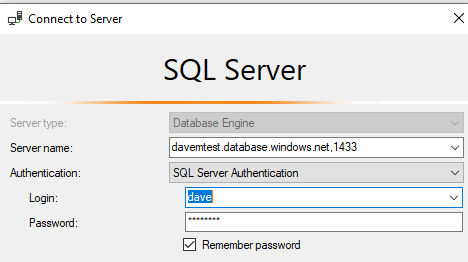

To hold the results of the file parsing I created a simple 5 DTU SQL Azure database, the lowest-powered db available.

Connect to the db from SSMS:

    CREATE TABLE [dbo].[VideoDownloadLog](
        [VideoDownloadLogID] [int] IDENTITY(1,1) NOT NULL,
        [TransactionDateTime] [datetime] NOT NULL,
        [OperationType] [nvarchar](255) NULL,
        [ObjectKey] [nvarchar](255) NULL,
        [UserAgent] [nvarchar](255) NULL,
        [Referrer] [nvarchar](1024) NULL,
        [FileName] [nvarchar](255) NULL,
        [DeviceType] [nvarchar](255) NULL,
        [IPAddress] [nvarchar](255) NULL,
        [RequestID] [nvarchar](255) NULL,
        [RequestStatus] [nvarchar](255) NULL,
        CONSTRAINT [PK_VideoDownloadLog] PRIMARY KEY CLUSTERED
        (
            [VideoDownloadLogID] ASC
        )WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]
    ) ON [PRIMARY]

Put in a table to hold the data.
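To show how a log line could map onto these columns, here is a minimal parsing sketch. It assumes the version 1.0 Storage Analytics log format, where fields are separated by semicolons and fields that may themselves contain semicolons (such as the user agent) are wrapped in double quotes. The field positions used below are my reading of that format and should be checked against the documentation. The type and method names are hypothetical, and DeviceType is left out because deriving it from the user agent is a separate step.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text;

// Hypothetical record type; property names mirror the VideoDownloadLog columns.
public sealed class VideoDownloadLogEntry
{
    public DateTime TransactionDateTime { get; set; }
    public string OperationType { get; set; }
    public string RequestStatus { get; set; }
    public string ObjectKey { get; set; }
    public string RequestID { get; set; }
    public string IPAddress { get; set; }
    public string UserAgent { get; set; }
    public string Referrer { get; set; }
    public string FileName { get; set; }
}

public static class StorageLogParser
{
    // Splits on ';' but keeps semicolons that sit inside double-quoted fields.
    // The surrounding quotes themselves are dropped.
    public static List<string> SplitFields(string line)
    {
        var fields = new List<string>();
        var current = new StringBuilder();
        bool inQuotes = false;
        foreach (char c in line)
        {
            if (c == '"') { inQuotes = !inQuotes; }
            else if (c == ';' && !inQuotes) { fields.Add(current.ToString()); current.Clear(); }
            else { current.Append(c); }
        }
        fields.Add(current.ToString());
        return fields;
    }

    // Returns null when the line has too few fields to be a v1.0 entry.
    public static VideoDownloadLogEntry Parse(string line)
    {
        List<string> f = SplitFields(line);
        if (f.Count < 29) return null;

        string objectKey = f[12]; // assumed shape: "/account/container/blob"
        return new VideoDownloadLogEntry
        {
            TransactionDateTime = DateTime.Parse(f[1], CultureInfo.InvariantCulture, DateTimeStyles.RoundtripKind),
            OperationType = f[2],
            RequestStatus = f[3],
            ObjectKey = objectKey,
            RequestID = f[13],
            IPAddress = f[15], // the log value includes the port, e.g. "1.2.3.4:5678"
            UserAgent = f[27],
            Referrer = f[28],
            // Everything after the last '/' (the whole key if there is none)
            FileName = objectKey.Substring(objectKey.LastIndexOf('/') + 1)
        };
    }
}
```

A blob-triggered function could read the copied log file line by line, call Parse on each line, and insert the non-null results into VideoDownloadLog with a parameterized command.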

Now we have all the concepts needed to run the entire solution, which can be found here.

Exceptions

Exceptions are caught and bubbled up to the Azure Portal UI.

The function is retried up to 5 times where applicable; guidance on exceptions is here.

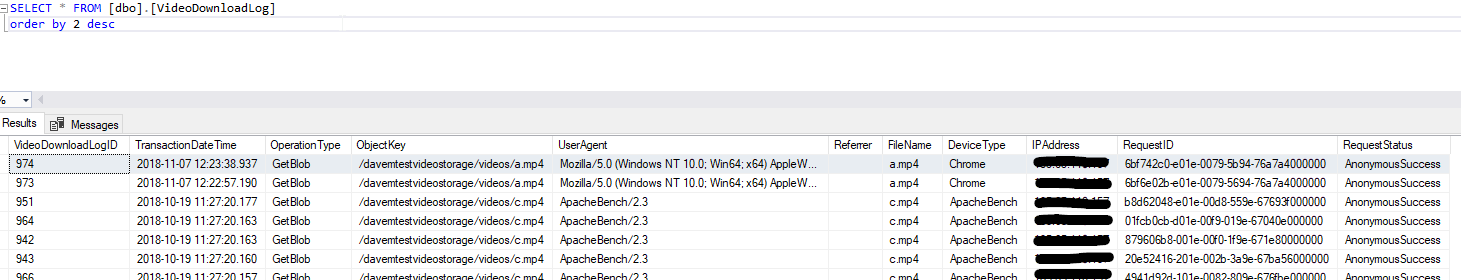

Report from the database

Now we have a granular log of who downloaded what and when. It is easy to create a nice report that the client can see every month.
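As an illustration of the kind of aggregation a monthly report needs, the sketch below counts rows per file for one month, which is what a GROUP BY on FileName would do in SQL. It assumes each row with OperationType "GetBlob" counts as one download. That is a simplification: a player that seeks or streams in ranges may issue several requests for a single viewing. The class name and tuple shape are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical report helper working on rows already loaded from VideoDownloadLog.
public static class DownloadReport
{
    // Counts GetBlob rows per file name for the given year and month.
    public static Dictionary<string, int> CountByFile(
        IEnumerable<(string FileName, string OperationType, DateTime When)> rows,
        int year,
        int month)
    {
        return rows
            .Where(r => r.OperationType == "GetBlob"
                        && r.When.Year == year
                        && r.When.Month == month)
            .GroupBy(r => r.FileName)
            .ToDictionary(g => g.Key, g => g.Count());
    }
}
```

In practice the same grouping would more likely run in the database itself; the C# version is only meant to make the counting rule explicit.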

Summary

- Azure Blob Storage to hold videos that can be streamed

- Setup logging on Blob Storage

- Copy logs every 5 minutes using an Azure Function

- Parse the logs into a database using an Azure Function

Azure Functions were an excellent fit for doing a task every 5 minutes, having a Blob storage watcher, parsing data and inserting into MSSQL.